The Top 5 Reasons To Integrate AI Auditing Into Your Development Workflow

AI auditors are most valuable when they become part of how you build. This post covers why, and what that looks like day to day.

AI auditing gets hyped as game-changing, but most teams care about one question: does it improve outcomes you can actually see in your roadmap, your audit cycle, and your budget?

Smart contract teams move fast, and risk shows up just as quickly. The old rhythm of building for weeks and relying on one or two manual audits right before launch leaves too much time for issues to creep in through small changes, integrations, and refactors. Security works best when it is continuous and lives in the same workflow where code is written and reviewed.

In practice, AI auditors earn their keep when they run continuously on the same surface area humans struggle to cover every day: pull requests, refactors, dependency changes, and the long tail of “small” commits that quietly introduce risk. Modern systems pair static analysis with LLM-style reasoning, data/control-flow analysis, and test/fuzzing support to surface likely issues early and make later security work faster and more focused.

Here are the five most important reasons to integrate AI auditing into your development workflow:

1) It Saves Money Over Time

The fastest way to justify an AI auditor is to stop thinking of security as a single audit invoice. The real cost is everything around it: late findings that trigger rewrites, audit cycles that expand because the codebase is noisy, and remediation that quietly consumes weeks of senior engineering time. An AI auditor helps earlier in the cycle, when fixes are cheap and changes are small. Over a year, even modest time savings compound into meaningful dollars because fewer hours get burned on preventable issues, repeated reviews, and launch delays.

AI auditing protects momentum, reduces risk, and turns security into a measurable ROI line item. Read our full breakdown of the estimated savings from AI auditing here.

2) It Shifts Security Left So You Can Ship Faster With Confidence

Most teams don’t slow down because they want to. They slow down because security feedback arrives too late. When an AI auditor runs continuously during development and on pull requests, it changes the timing of the conversation. Engineers get fast feedback while context is fresh, and issues are corrected before they become design assumptions. That “shift left” effect is less about finding exotic exploits and more about keeping weak logic from turning into foundational architecture. The end result is faster iteration without treating safety as a tradeoff.
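To make the pull-request timing concrete, here is a minimal sketch of a merge gate, assuming the auditor can export its findings as structured records. The `Finding` type, the severity scale, and the `high` default threshold are hypothetical choices for illustration, not any particular tool's output format. The idea is simply that a CI job fails when a finding at or above the threshold appears, so the security conversation happens on the PR instead of weeks later.

```python
from dataclasses import dataclass

# Hypothetical severity scale; real tools define their own.
SEVERITY_RANK = {"info": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class Finding:
    rule: str      # identifier of the check that fired
    path: str      # file the finding points at
    line: int
    severity: str  # one of the keys in SEVERITY_RANK


def blocking_findings(findings, threshold="high"):
    """Return the findings at or above `threshold`; these should block the merge."""
    limit = SEVERITY_RANK[threshold]
    return [f for f in findings if SEVERITY_RANK[f.severity] >= limit]


def pr_gate_exit_code(findings, threshold="high"):
    """0 lets the CI job pass; 1 fails it so the PR cannot merge unreviewed."""
    return 1 if blocking_findings(findings, threshold) else 0


# Example: one medium and one high finding on a pull request.
findings = [
    Finding("unchecked-call", "src/Vault.sol", 88, "medium"),
    Finding("reentrancy", "src/Vault.sol", 120, "high"),
]
for f in blocking_findings(findings):
    print(f"BLOCKING {f.severity}: {f.rule} at {f.path}:{f.line}")
```

A CI step would pass the result of `pr_gate_exit_code` to `sys.exit`. Keeping the threshold configurable lets a team start by blocking only high and critical issues and tighten it as noise drops.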

3) It Expands Coverage Across the Codebase Without Expanding Headcount

Manual review is high-signal, but it doesn’t scale linearly with a growing repo, multiple contributors, and constant refactors. AI auditing products can repeatedly scan large surfaces, follow call paths, spot suspicious patterns, and propose exploit hypotheses that a human can quickly confirm or dismiss. That breadth matters because many incidents don’t come from a single dramatic mistake. They come from small changes that interact in unexpected ways. Continuous machine coverage makes it harder for those issues to slip through unnoticed.

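Developer time is expensive, so every hour of internal review has a real cost. Continuous machine coverage takes the repetitive first pass off engineers' plates, leaving their review time for the findings that need human judgment.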
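“Following call paths” can be illustrated with a plain reachability search. This sketch assumes a call graph has already been extracted into a dictionary that maps each function to the functions it calls; the function names and the choice of which ones count as sensitive are hypothetical. Real analyzers also have to model inheritance, modifiers, external calls, and delegatecall, all of which this ignores.

```python
from collections import deque


def reachable_from(call_graph, entry_points):
    """Breadth-first search returning every function reachable from the entry points."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        for callee in call_graph.get(fn, ()):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def exposed_sensitive(call_graph, entry_points, sensitive):
    """Sensitive functions an external caller can reach, sorted for stable output."""
    return sorted(reachable_from(call_graph, entry_points) & set(sensitive))


# Hypothetical call graph for a small vault contract.
call_graph = {
    "deposit": ["_updateShares"],
    "withdraw": ["_updateShares", "_transferOut"],
    "_adminSweep": ["_transferOut"],
}
print(exposed_sensitive(call_graph, ["deposit", "withdraw"], ["_transferOut", "_adminSweep"]))
# ['_transferOut']
```

Here `_adminSweep` does not show up because it is not reachable from the listed entry points. In a real contract that is only reassuring if its visibility and access control actually match that assumption, which is exactly the kind of lead a human should confirm.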

4) It Cuts Through Noise By Using Context And Specialized Analysis

If you’ve tried automation before, you may be skeptical for a good reason. Tools that throw generic warnings at every function train teams to ignore them. Modern AI auditors are improving by using repository context and more targeted “specialist” reasoning to judge what is relevant to your system, your assumptions, and your threat model. When the tool can read the surrounding code and infer intent, findings become less like a checklist and more like a reviewer who understands the difference between a theoretical issue and a likely real one.
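The simplest form of context is knowing which code actually ships. As a deliberately rule-based stand-in for the richer, model-driven judgment described above, this sketch drops findings located in test, mock, or script directories and collapses exact duplicates. The directory names are an assumed project convention, and the `Finding` fields are hypothetical.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class Finding:
    rule: str
    path: str
    line: int


# Assumed convention: directories that hold code which never ships on-chain.
NON_PRODUCTION_DIRS = {"test", "tests", "mocks", "script"}


def is_production(path):
    """True unless one of the file's parent directories marks it as non-production."""
    return not (set(PurePosixPath(path).parts[:-1]) & NON_PRODUCTION_DIRS)


def triage(findings):
    """Drop non-production findings and exact duplicates, preserving the original order."""
    seen = set()
    kept = []
    for f in findings:
        key = (f.rule, f.path, f.line)
        if is_production(f.path) and key not in seen:
            seen.add(key)
            kept.append(f)
    return kept


raw = [
    Finding("reentrancy", "src/Vault.sol", 120),
    Finding("reentrancy", "src/Vault.sol", 120),    # duplicate from a second pass
    Finding("tx-origin", "test/Vault.t.sol", 14),   # test helper, never deployed
    Finding("unchecked-call", "src/mocks/MockToken.sol", 9),
]
for f in triage(raw):
    print(f.rule, f.path, f.line)
```

Rules like these only remove obvious noise; deciding whether a production finding matters to your threat model is where repository-aware reasoning, and ultimately a human, comes in.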

5) It Turns Security Into An Ongoing System Instead Of An Isolated Event

Audits are still essential, but they are snapshots. The moment you merge a few new features, upgrade dependencies, or ship a new integration, that snapshot starts to age. An AI auditor helps maintain continuity between major checkpoints by continuously checking changes and catching regressions early. This fits how mature teams think about security: a layered system of prevention, detection, and response. AI becomes one of those layers, keeping the baseline high while humans focus on the hardest questions of design, economics, and novel risk.
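Catching regressions between audits mostly comes down to comparing each run against a baseline, such as the set of findings known at the last audit. Here is a minimal sketch, assuming findings can be fingerprinted by rule, file, and enclosing function. That fingerprint scheme and the `Finding` fields are hypothetical, chosen because line numbers shift with almost every edit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str
    path: str
    function: str  # enclosing function; more stable than a line number across edits


def fingerprint(f):
    """Key used to match a finding across runs; deliberately ignores line numbers."""
    return (f.rule, f.path, f.function)


def new_since_baseline(baseline, current):
    """Findings in the current run with no match in the baseline snapshot."""
    known = {fingerprint(f) for f in baseline}
    return [f for f in current if fingerprint(f) not in known]


def resolved_since_baseline(baseline, current):
    """Baseline findings that no longer appear, for example because they were fixed."""
    live = {fingerprint(f) for f in current}
    return [f for f in baseline if fingerprint(f) not in live]


baseline = [
    Finding("reentrancy", "src/Vault.sol", "withdraw"),
    Finding("unchecked-call", "src/Vault.sol", "sweep"),
]
current = [
    Finding("reentrancy", "src/Vault.sol", "withdraw"),
    Finding("oracle-staleness", "src/Pricer.sol", "getPrice"),
]
print("new:", new_since_baseline(baseline, current))
print("resolved:", resolved_since_baseline(baseline, current))
```

Matching on the enclosing function keeps unrelated edits from resurfacing old findings. The tradeoff is that two findings of the same rule inside one function collapse into a single fingerprint, so a new instance there would be missed.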

Closing Thoughts

AI auditors are most valuable when they become part of how you build. They save money by reducing rewrites, remediation time, and unnecessary audit churn. They shift security left, so issues get fixed while the context is fresh. They expand coverage across a growing codebase without needing more headcount. They reduce noise by adding context and more targeted analysis. And they help turn security into a continuous system instead of a one-time event.

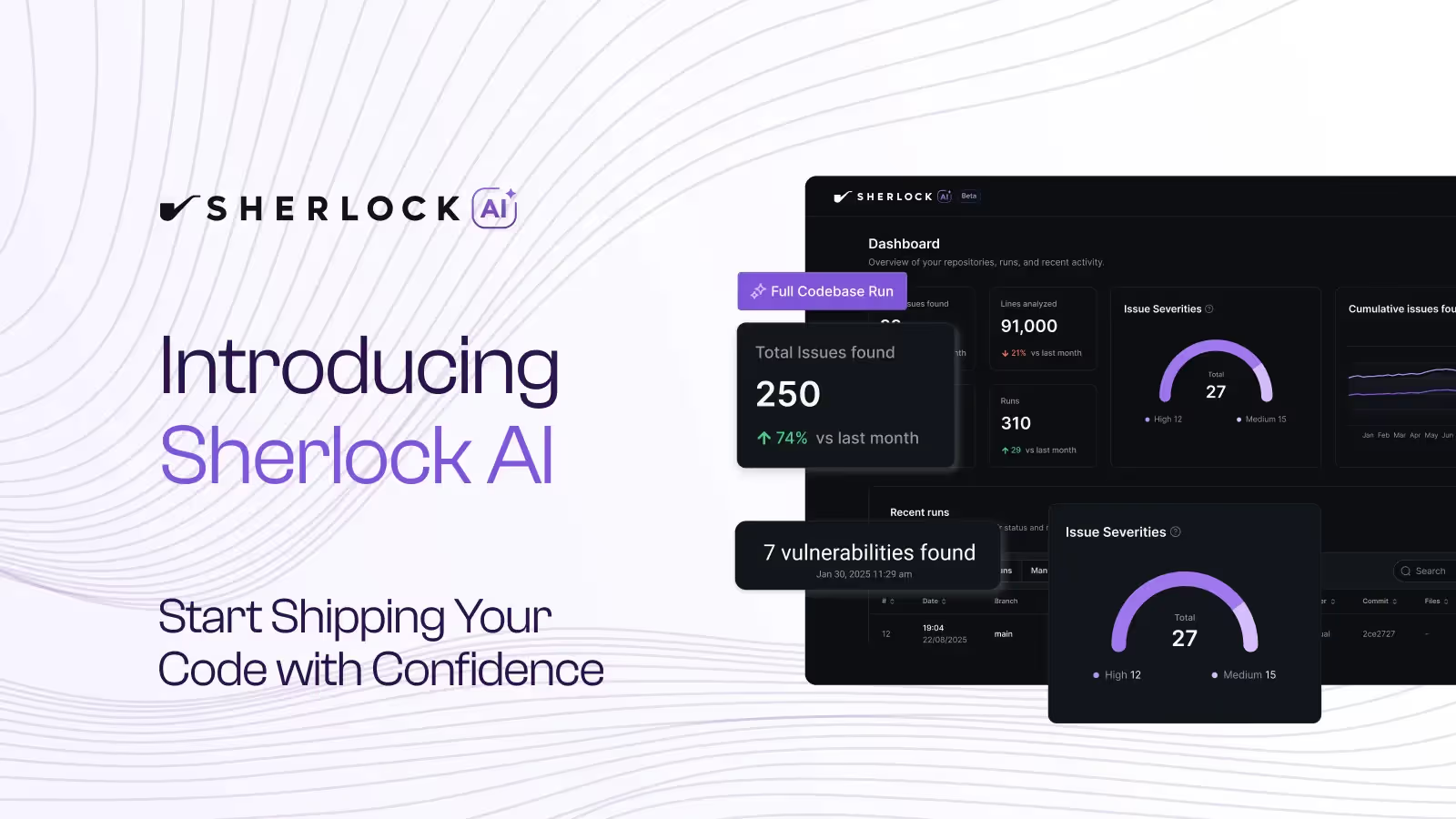

If you want support through collaborative auditing, an audit contest, or development guidance through Sherlock AI, contact our team here.

Q&A

1) What do you mean by an “AI auditor”?

An AI auditor is a system that combines traditional static analysis with LLM-style reasoning to identify risky patterns, trace potential exploit paths, and recommend fixes. Many tools also run multi-step loops that propose a hypothesis, inspect relevant code, and refine or discard the lead.
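The propose, inspect, refine loop can be sketched as a small state machine over a single lead. Everything here is hypothetical: `Lead`, the verdict strings, and the `inspect` callback stand in for whatever static analysis or model call a real tool performs at each step. What the sketch shows is the control flow: a refuting observation discards the lead, a supporting one with nothing left to chase confirms it, and anything else queues follow-up locations until a step budget runs out.

```python
from dataclasses import dataclass, field


@dataclass
class Lead:
    claim: str    # e.g. "withdraw() can be re-entered before balances update"
    targets: list  # code locations still to inspect
    evidence: list = field(default_factory=list)
    status: str = "open"  # "open", "confirmed", or "discarded"


def investigate(lead, inspect, max_steps=5):
    """Inspect targets one at a time until the lead is confirmed, discarded, or out of budget.

    `inspect(location, lead)` is a hypothetical callback returning
    (verdict, note, follow_ups) with verdict in {"supports", "refutes", "neutral"}.
    """
    steps = 0
    while lead.targets and lead.status == "open" and steps < max_steps:
        location = lead.targets.pop(0)
        verdict, note, follow_ups = inspect(location, lead)
        lead.evidence.append((location, note))
        if verdict == "refutes":
            lead.status = "discarded"
        elif verdict == "supports" and not follow_ups:
            lead.status = "confirmed"
        else:
            lead.targets.extend(follow_ups)  # refine: chase the new locations
        steps += 1
    return lead


# Example with a canned inspector in place of real analysis.
def canned_inspect(location, lead):
    answers = {
        "Vault.withdraw": ("supports", "external call before state update", ["Vault._transferOut"]),
        "Vault._transferOut": ("supports", "no reentrancy guard", []),
    }
    return answers[location]


result = investigate(Lead("reentrancy in withdraw", ["Vault.withdraw"]), canned_inspect)
print(result.status, result.evidence)
```

A lead still marked `open` when the loop ends is inconclusive, which is exactly the kind of item to hand to a human reviewer rather than report as a finding.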

2) Will an AI auditor replace a manual audit?

No. The most effective framing is “force multiplier.” AI increases coverage and speeds up repetitive work, but humans still decide exploitability, severity, and intent in complex systems. Security leaders repeatedly emphasize that audits are only one part of a broader program.

3) What should teams do with AI findings so they don’t waste time?

Treat findings as hypotheses and validate them with invariants, threat modeling, and tests. A good internal process starts by writing down what must always remain true, who can influence the system, and where it breaks under stress.
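Here is what “write down what must always remain true” can look like once it becomes a test. This sketch uses a hypothetical `ToyVault`, written in Python in place of a real contract, states one invariant (shares are conserved and never negative), and checks it after every step of many random deposit and withdraw sequences, a rudimentary form of fuzzing. In a real project the same idea would live in your contract test framework; the names, value ranges, and run counts here are illustrative assumptions.

```python
import random


class ToyVault:
    """Deliberately tiny stand-in for a contract: per-user shares plus a running total."""

    def __init__(self):
        self.balances = {}
        self.total_shares = 0

    def deposit(self, user, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balances[user] = self.balances.get(user, 0) + amount
        self.total_shares += amount

    def withdraw(self, user, amount):
        if amount <= 0 or amount > self.balances.get(user, 0):
            raise ValueError("invalid withdrawal")
        self.balances[user] -= amount
        self.total_shares -= amount


def invariant_holds(vault):
    """What must always remain true: shares are conserved and no balance goes negative."""
    return (sum(vault.balances.values()) == vault.total_shares
            and all(b >= 0 for b in vault.balances.values()))


def fuzz_invariant(vault_factory, runs=200, ops_per_run=50, seed=0):
    """Apply random operations; return the first sequence that breaks the invariant, else None."""
    rng = random.Random(seed)
    users = ["alice", "bob", "carol"]
    for _ in range(runs):
        vault = vault_factory()
        history = []
        for _ in range(ops_per_run):
            op = rng.choice(["deposit", "withdraw"])
            user = rng.choice(users)
            amount = rng.randint(-5, 100)  # includes invalid amounts on purpose
            history.append((op, user, amount))
            try:
                getattr(vault, op)(user, amount)
            except ValueError:
                pass  # rejected inputs are fine; only corrupted state counts
            if not invariant_holds(vault):
                return history
    return None


print(fuzz_invariant(ToyVault))
```

If an AI finding claims that, say, a withdrawal path can desynchronize balances, encoding that claim as an invariant like this either reproduces it with a concrete operation sequence or gives you evidence to dismiss it.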

4) When should we start using an AI auditor if we already have an external audit scheduled?

Immediately. The best use is pre-audit: remove shallow issues, clarify intent, and improve test coverage so the external audit can focus on deeper attack paths.

5) Does an AI auditor help beyond EVM?

Yes, depending on the tool. Sherlock AI v2.2 added Solana Rust support (beta) and expanded analysis beyond EVM-only workflows.