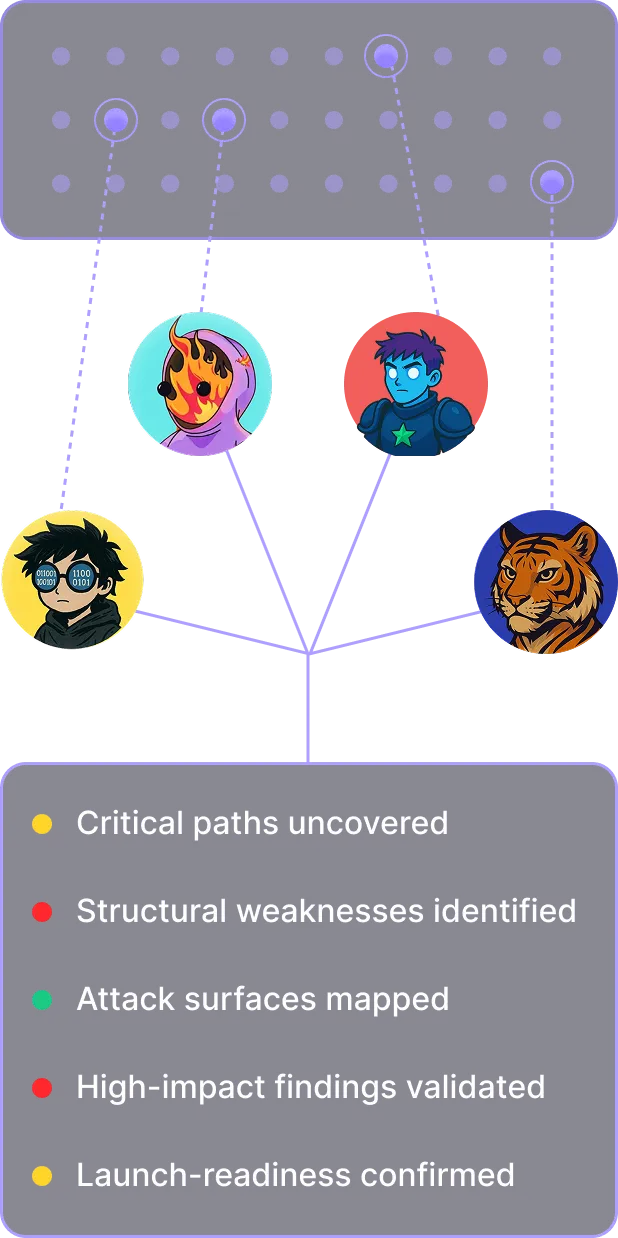

The Collaborative Audit Model Built for Better Findings

The Model That Produces Stronger Audit Results

Ship on Schedule, Remove Risk, Prove the Quality of Your Code

Sherlock’s collaborative audits surface the issues that matter, give you clear fixes, and leave you with proof you can stand behind when it is time to go live.

Ship on Schedule Without Quality Tradeoffs

Sherlock’s ranked researcher network assembles the right team in days rather than months, removing the engineering stall-outs that slow launches and inflate burn. Faster review cycles keep your team's momentum going without sacrificing depth or security rigor.

Raise Confidence in Your Code Before Mainnet

Objective performance data backs every researcher involved in your review. You get verifiable proof that specialists with demonstrated track records reviewed your code, giving founders and investors grounded confidence before capital flows on chain.

Cut Down Post-Launch Surprises and Financial Risk

Sherlock’s collaborative model reduces the blind spots that trigger post-deployment incidents, protecting user funds and lowering the operational, reputational, and legal fallout teams face when something slips through. Stronger review means fewer emergencies and lower long-term costs.

What Leading Protocols Have to Say

Sherlock Matches Your Codebase With the Best Researchers

Each review draws on years of scored findings, contest performance, and researcher insight, giving you depth, specialization, and measurable proof that the right experts were matched to your architecture.

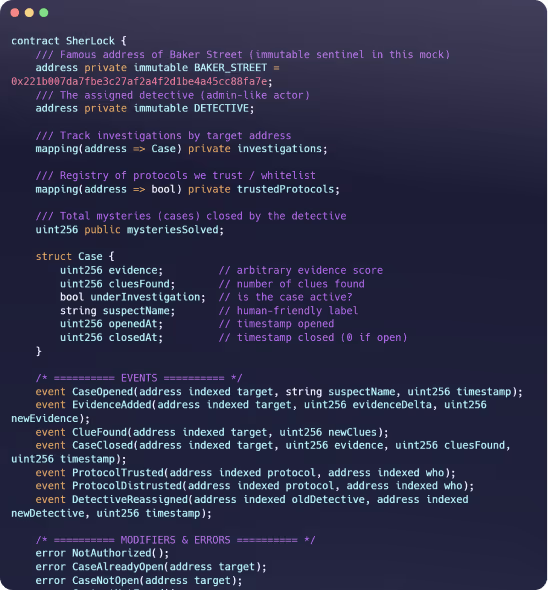

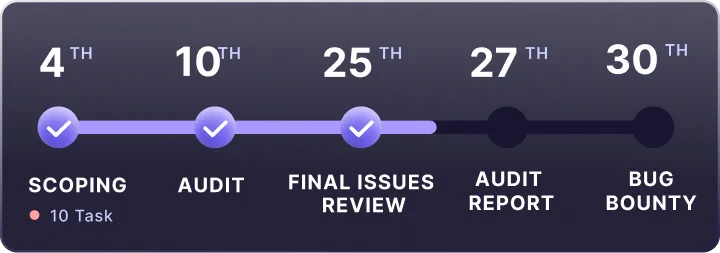

Complete Lifecycle Security: Development, Audit, and Post-Launch Protection

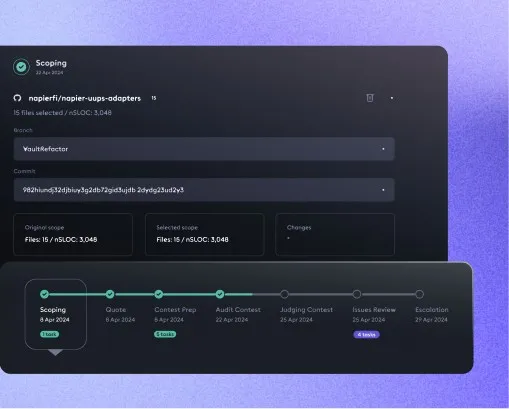

Sherlock AI runs throughout the development cycle, reviewing code and flagging risky patterns and logic paths early so teams enter later stages with a cleaner, more stable codebase.

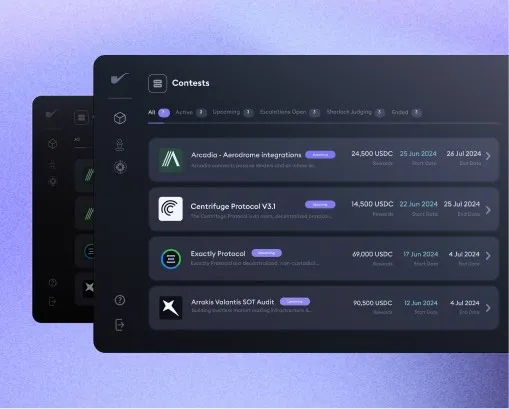

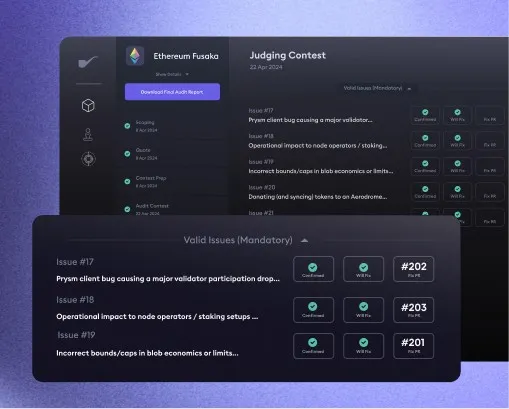

Collaborative audits and contests concentrate expert attention where it matters most, surfacing deeper issues before launch and reducing rework late in the process.

The context built during development and audit carries forward. Live code stays under active scrutiny through bounties, and when issues emerge, teams can respond clearly with no downtime.