Bug Bounties by Sherlock: Live Code Protection

The Foundation of Sherlock’s Bug Bounty

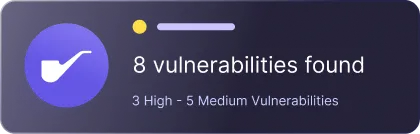

- Stake-Gated Submissions: Researchers post collateral with every report, filtering out low-effort, speculative, and duplicate submissions.

- Lead Auditor Review: Senior auditors validate every submission, confirm impact, and assign severity before it reaches your team.

- Active Triage: Submissions trigger direct involvement from Sherlock’s in-house security team during triage and response.

- Context Carries Forward: Bounties inherit context from prior reviews and feed new findings back into ongoing security work.

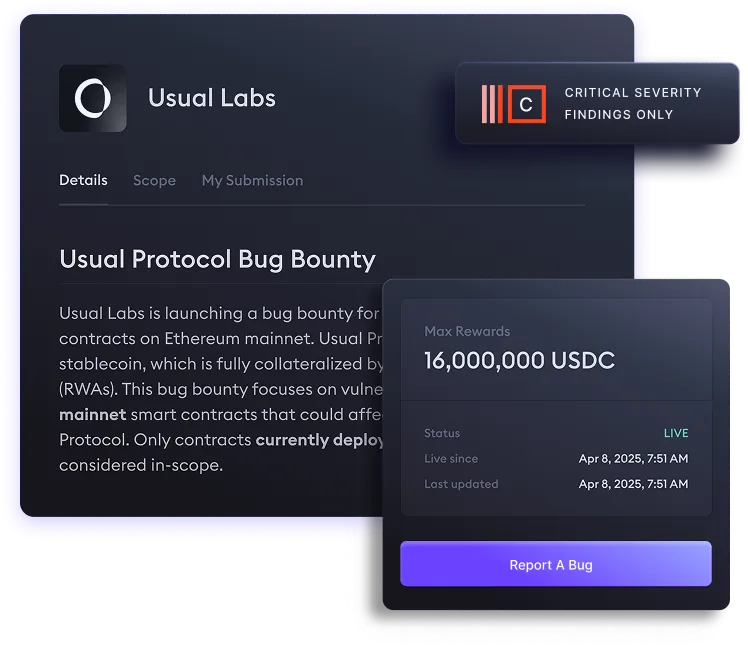

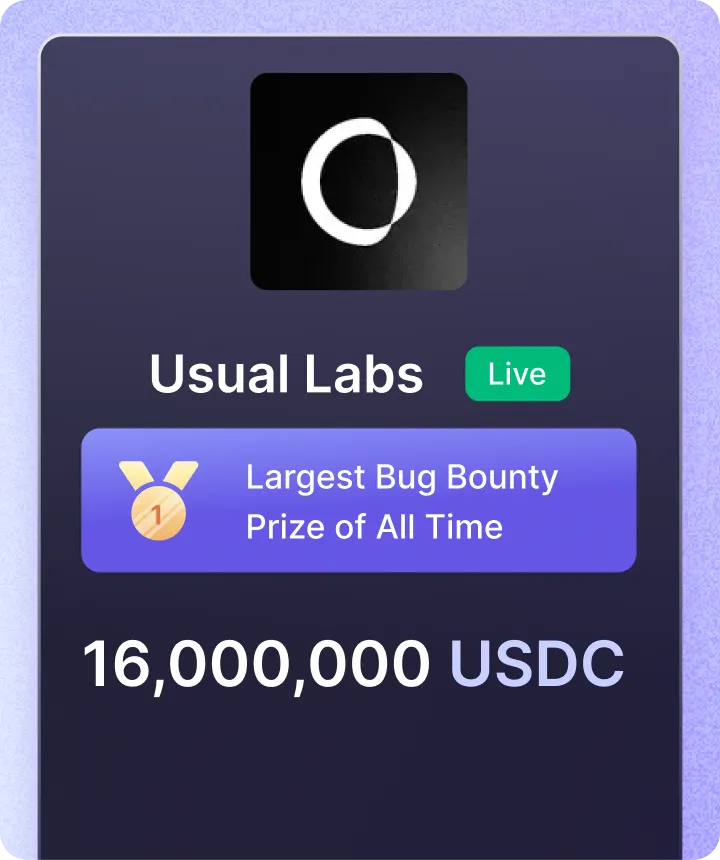

Protecting the highest-prestige protocols in web3

Stay Protected When It Matters Most

Sherlock’s bug bounties keep an active layer of protection on live code, extending security beyond launch when risk is highest.

Context from earlier reviews carries forward into production, so researchers focus on what matters and surface high-impact issues faster.

Why Teams Choose Sherlock for Post-Launch Security

Bug bounties that deliver continuous, real-world security without adding headcount.

Triage Load

An Active Layer of Defense Around Your Protocol

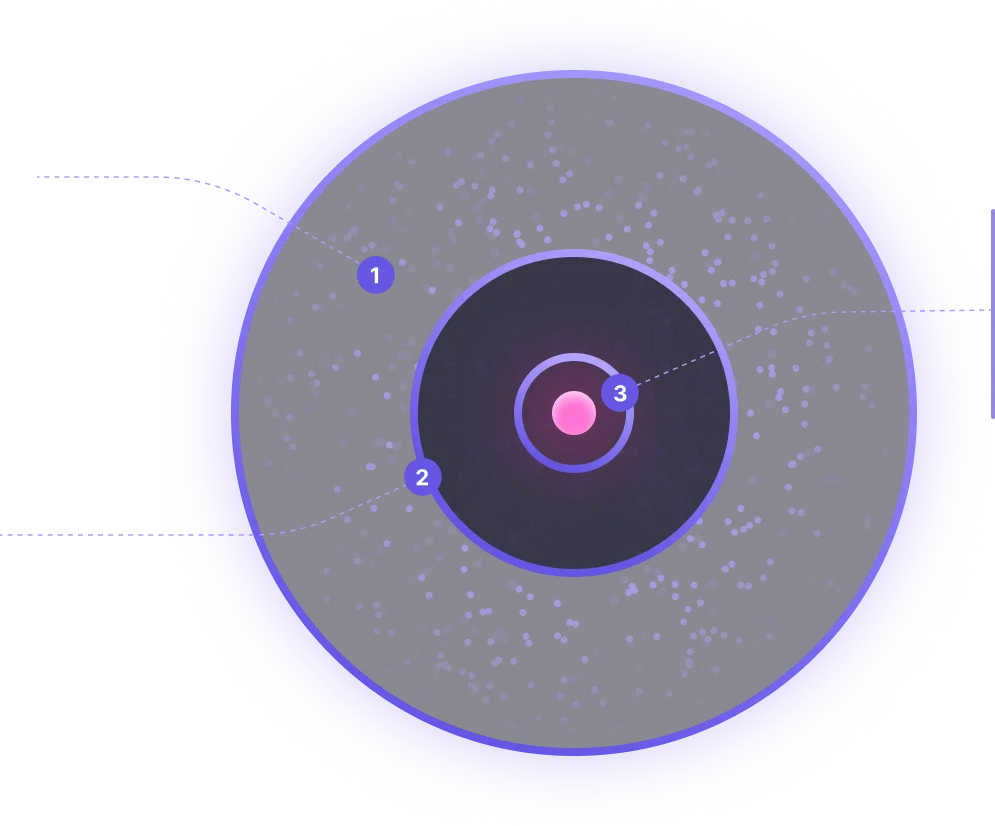

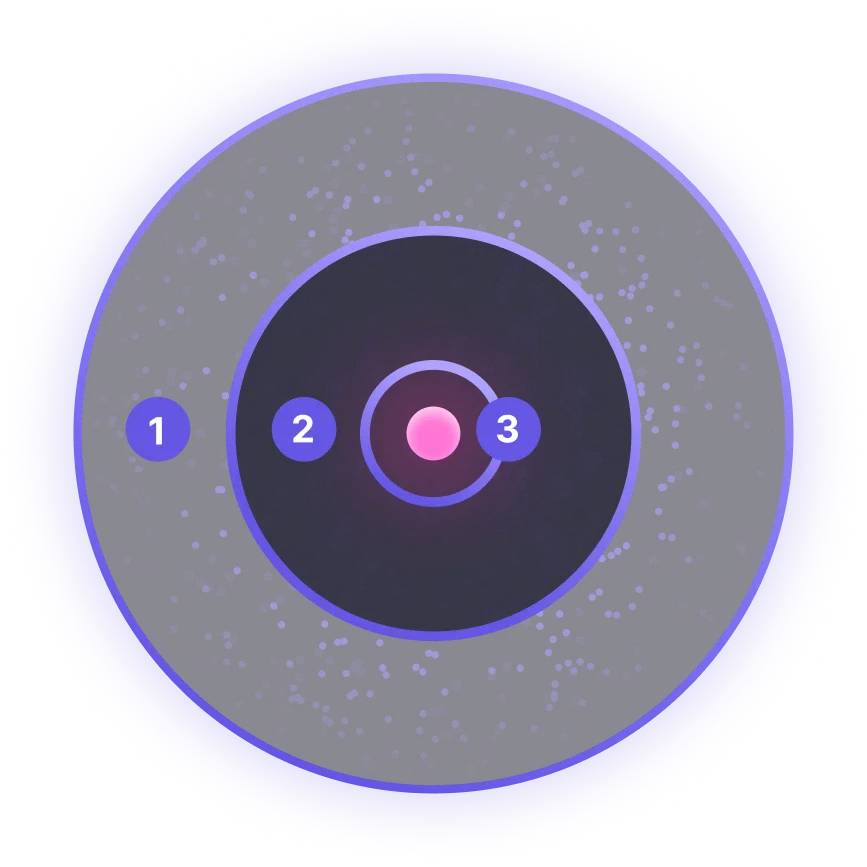

1. Economically Motivated: Sherlock’s bounty pool draws researchers who focus on live, high-impact findings, creating steady pressure on your contracts from people who know how to break things.

2. Perpetual Coverage: When your code updates, the attention on it updates too. New surface area gets scrutiny the moment it’s deployed.

3. Proactive Defense: Sherlock’s bug bounty gives your protocol a protective ring that stays active while your code is running. Independent researchers test your deployed contracts, surface issues, and pass them into Sherlock’s triage and verification flow for payout.

It functions as a standing guard around your protocol, driven by incentives that keep skilled researchers watching as your code shifts and grows.

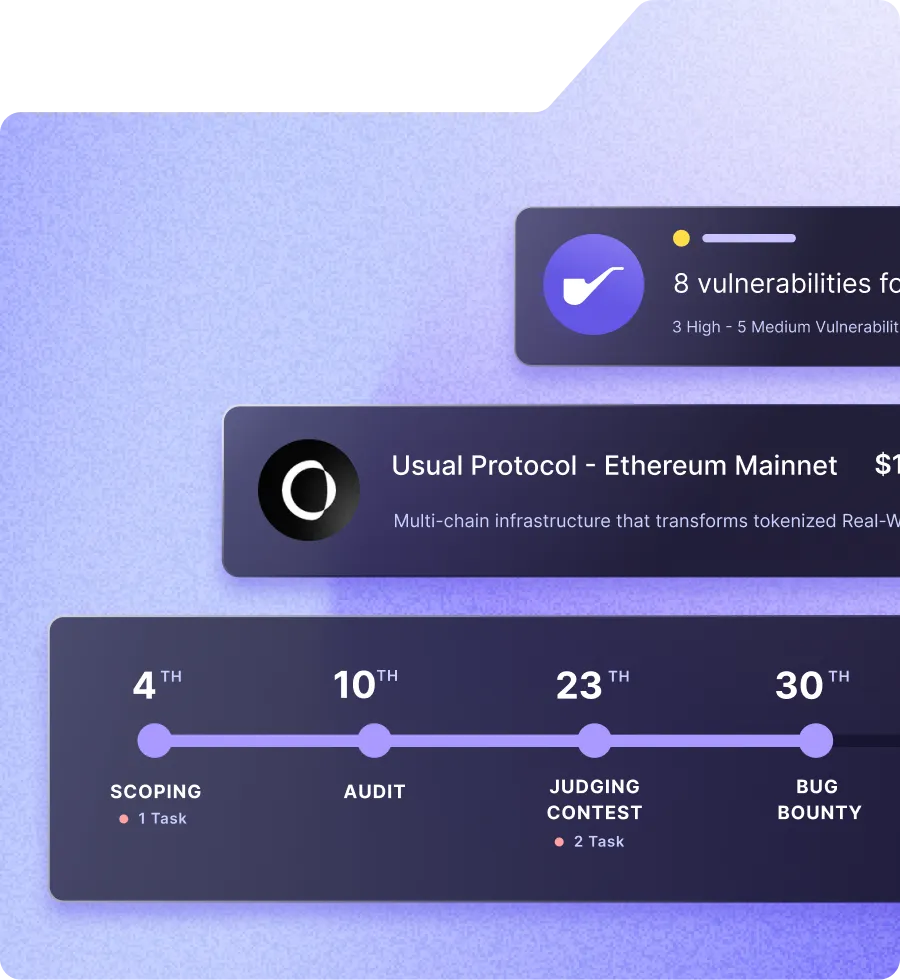

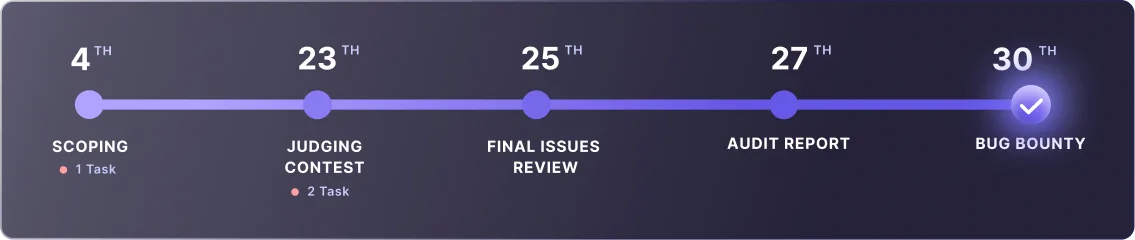

Complete Lifecycle Security: Development, Audit, Post-Launch Protection

Sherlock AI runs during development, reviewing code and flagging risky patterns and logic paths early so teams enter later stages with a cleaner, more stable codebase.

Collaborative audits and contests concentrate expert attention where it matters most, surfacing deeper issues before launch and reducing rework late in the process.

The context built during development and audit carries forward. Live code stays under active scrutiny through bounties, and when issues emerge, teams respond clearly with no downtime.