The Audit Contest Powered by Sherlock

How Sherlock Applies Large-Scale Review to Your Code

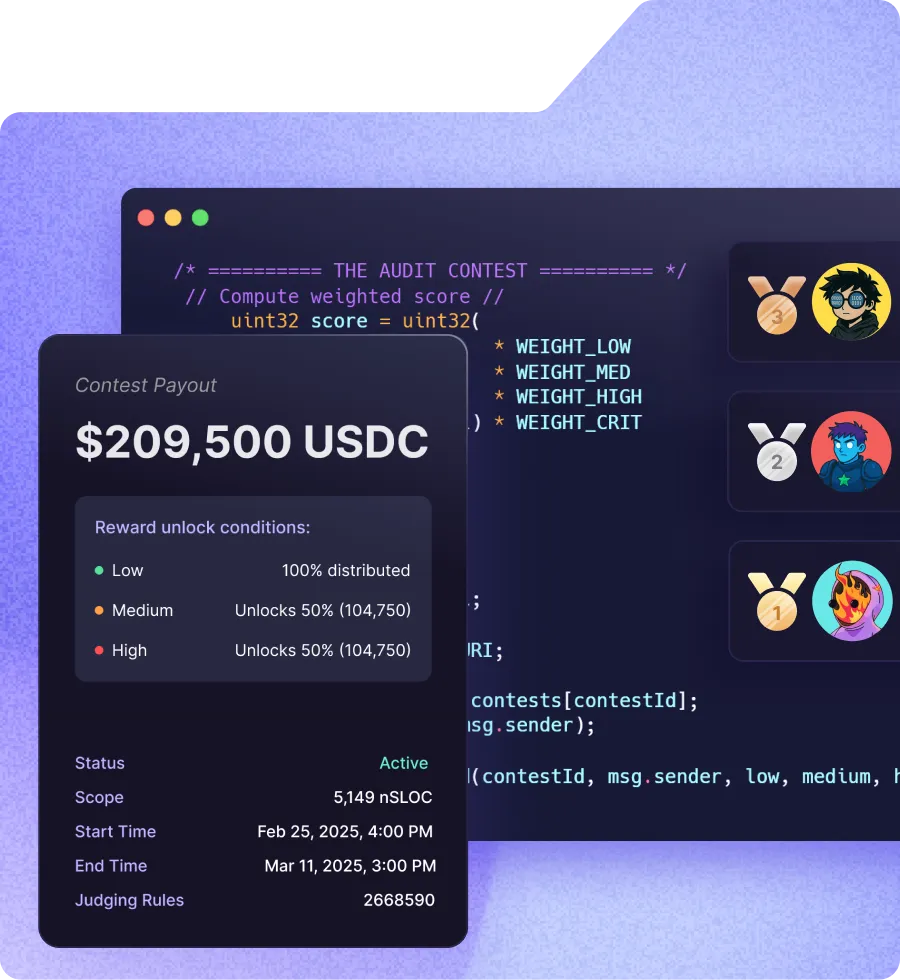

A Web3 Contest Model Built for Complex Code

Sherlock’s audit contests remove the friction, doubt, and delay that hold teams back from launching the best possible version of their code.

Higher Quality Code at Launch

Parallel testing across many independent researchers exposes edge cases single teams miss. Combined with senior judgment, this produces clearer invariants, tighter assumptions, and fewer post-deployment surprises.

Shorter Fix Cycles and Fewer Re-Audits

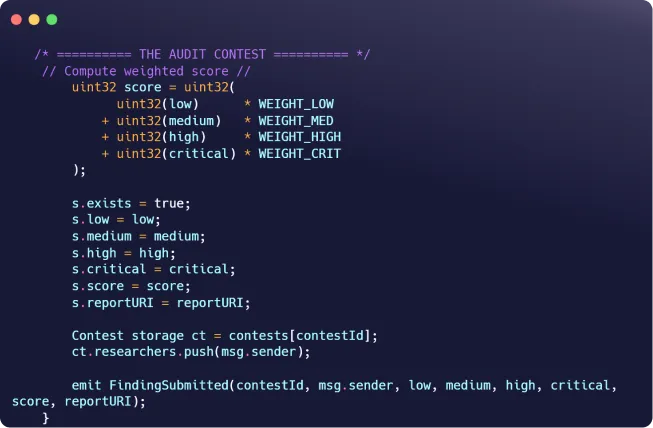

Findings arrive reviewed, deduplicated, and severity-aligned, so engineers spend less time sorting noise and more time fixing real issues. This shortens remediation timelines and reduces the need for follow-up audits.

Repeatable Quality Across Every Engagement

Traditional contests depend heavily on who shows up. Sherlock removes that variance by pairing economic incentives with historical performance data and senior review, so each contest benefits from prior outcomes.

What Leading Protocols Have to Say

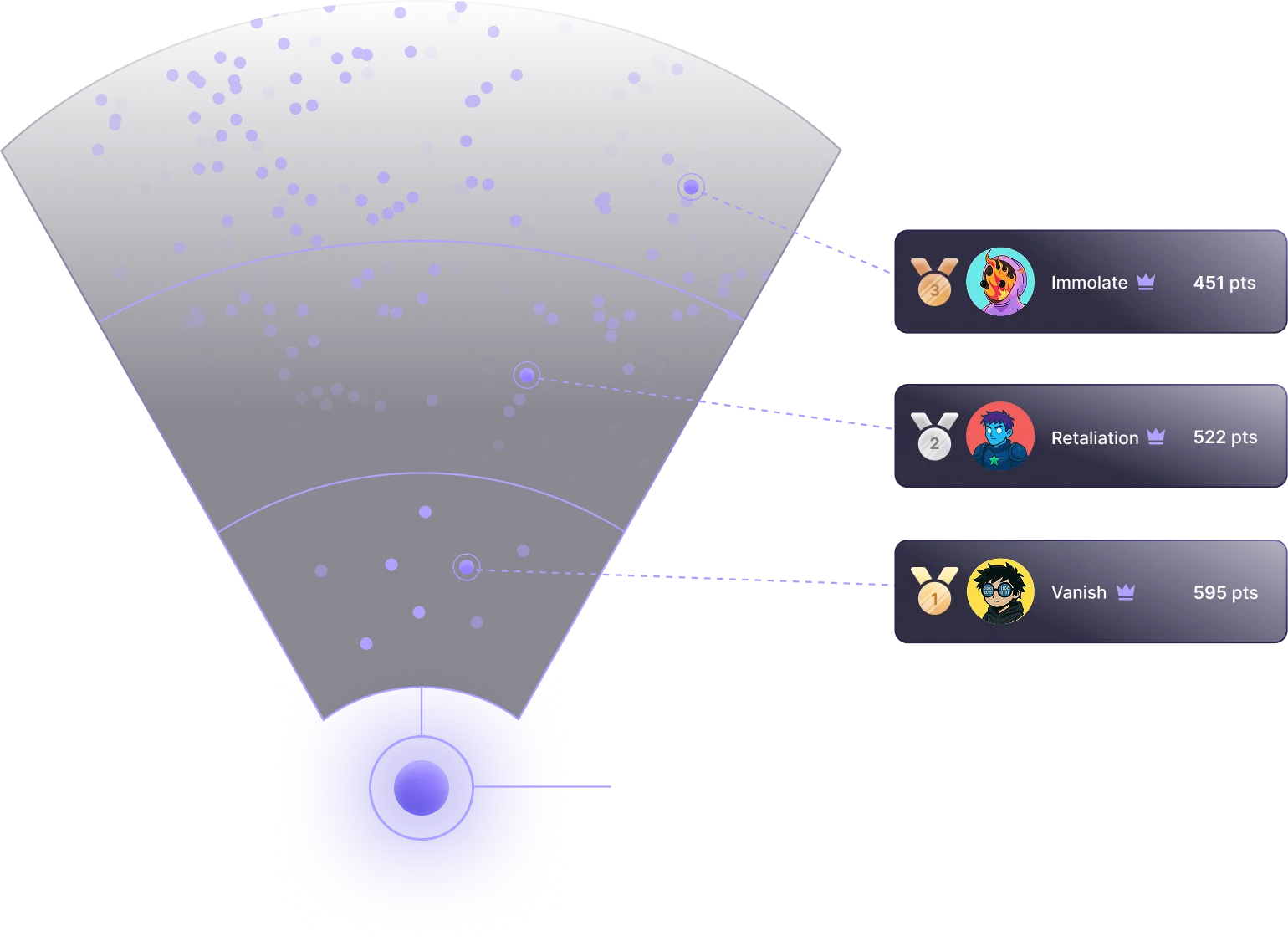

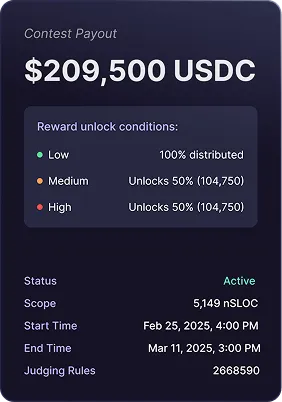

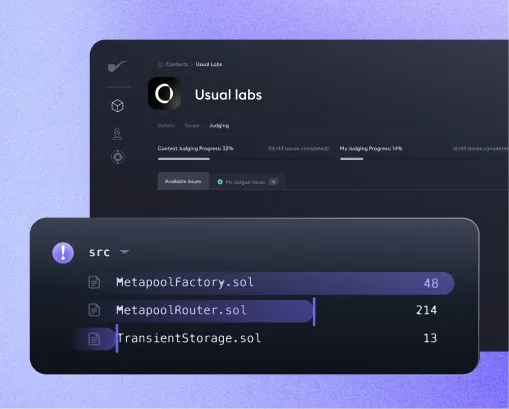

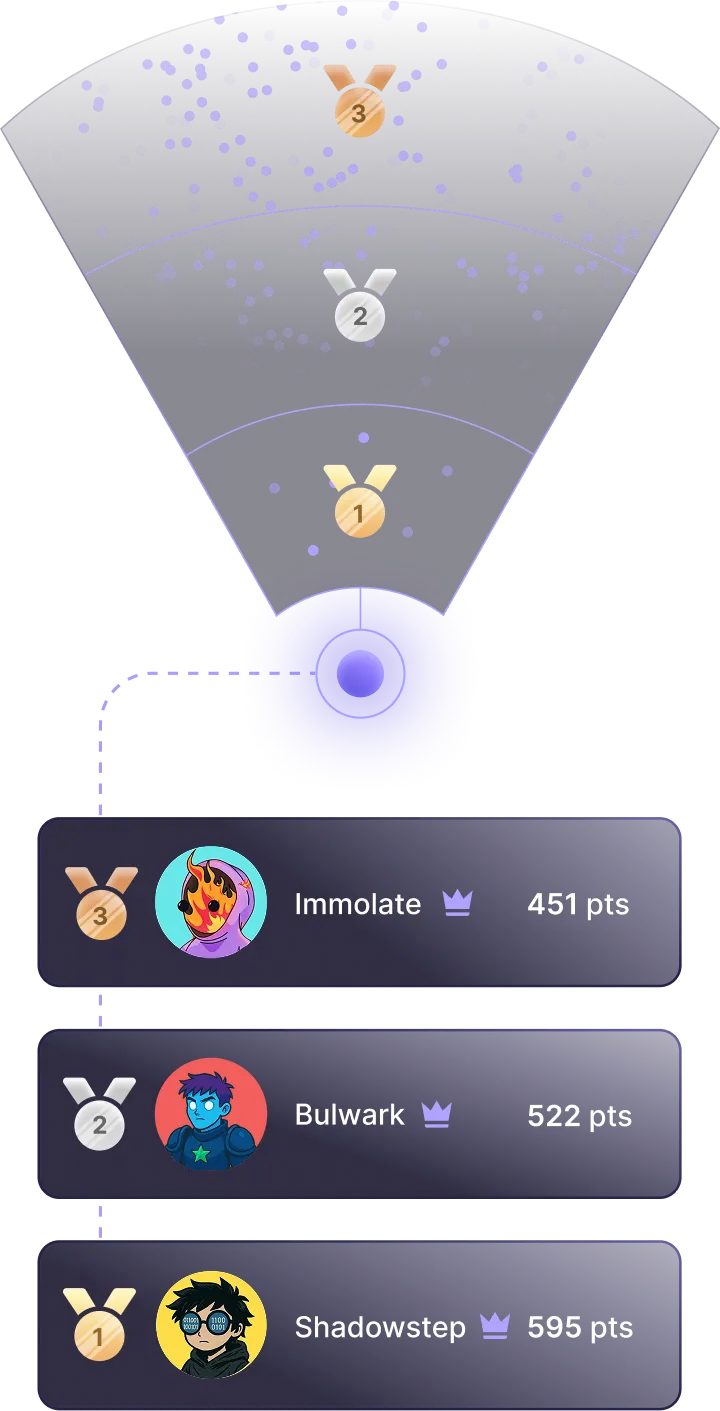

Sherlock’s Contest Model Finds Issues at Scale

Complete Lifecycle Security: Development, Audit, Post-Launch Protection

Sherlock AI runs during development, reviewing code and flagging risky patterns and logic paths early so teams enter later stages with a cleaner, more stable codebase.

Collaborative audits and contests concentrate expert attention where it matters most, surfacing deeper issues before launch and reducing rework late in the process.

The context built during development and audit carries forward. Live code stays under active scrutiny through bounties, and when issues emerge, teams respond clearly with no downtime.