Web3 Audit Benchmark: GPT-5.2 and Claude 4.5 vs. Sherlock AI

A Controlled Benchmark comparing ChatGPT 5.2 and Claude Sonnet 4.5 against Sherlock AI v2.2 on the Flayer + Moongate repo, scored by an independent security researcher for validated findings, false positives, and code-grounded evidence.

Introduction

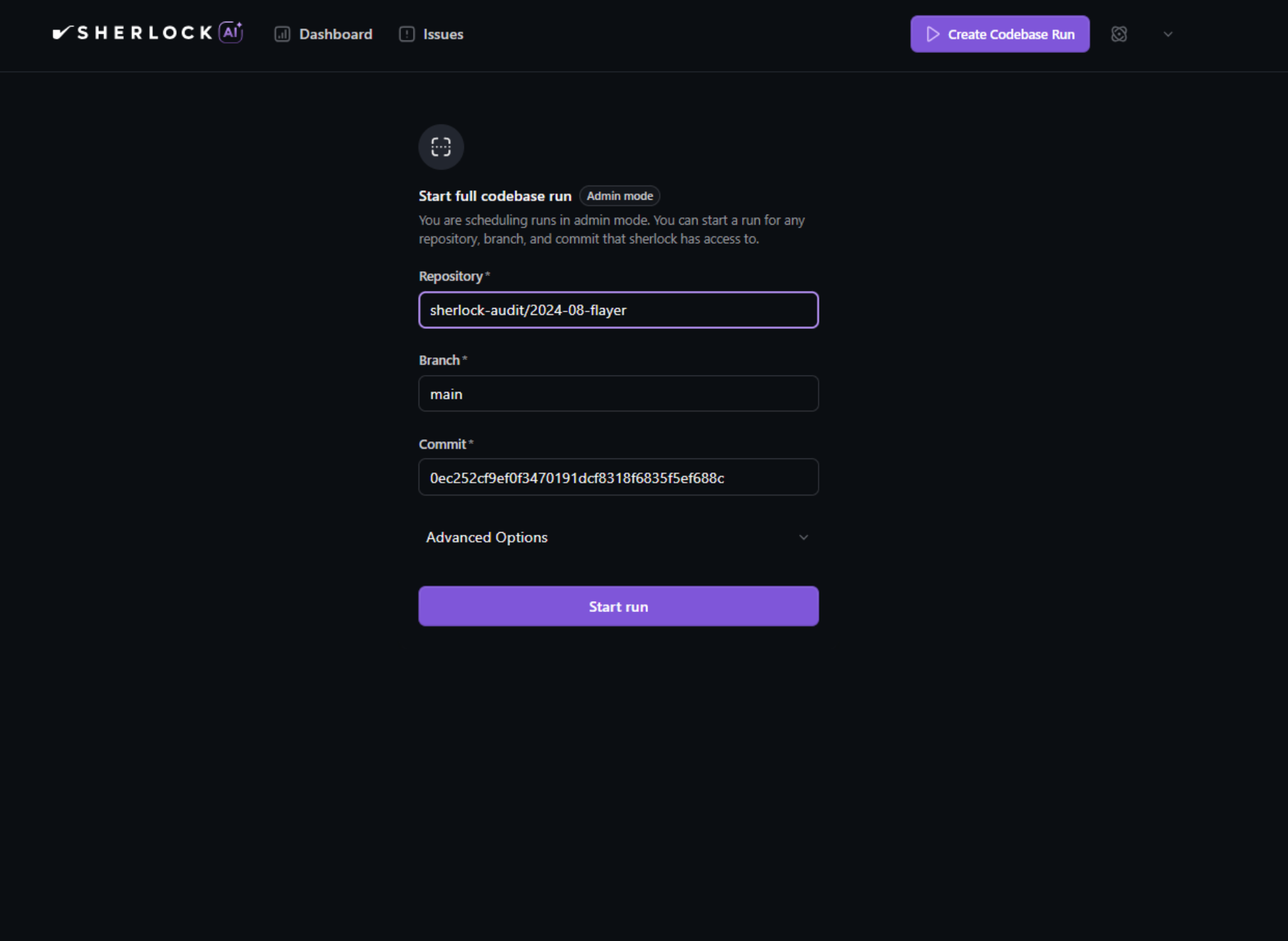

We ran a controlled head-to-head benchmark of two general-purpose LLMs (ChatGPT 5.2 and Claude Sonnet 4.5) versus a Web3-specialized auditing system (Sherlock AI v2.2) on a real protocol codebase: Flayer’s September 2024 audit scope. The intent was to measure static vulnerability discovery performance under identical inputs using outcomes we can score: validated findings, code-grounded evidence, and practical actionability.

To keep the comparison fair, we fixed the task specification and scope for the general LLM runs (same repository snapshot, prompt structure, reporting format, and severity buckets). Sherlock AI was run on the same scope by uploading the repo and executing the standard v2.2 audit flow. This compares “single-pass prompted analysis” (general LLMs) against “system-run analysis” (Sherlock AI) on the same target.

We separated generation from evaluation: every item produced by all three systems was reviewed by an independent human triager (a senior security researcher not involved in Sherlock AI development), who assessed validity and normalized severity based on the code and whether an exploit path holds under realistic assumptions. This yields comparable metrics (validated findings vs. total claims, false positives, evidence completeness) anchored to a February 7, 2026 run.

This isn’t a formal research study, and it isn’t perfect: there are many small variables we couldn’t fully control. Still, we wanted to run the comparison with consistent inputs, third-party triage, and measurable output quality, then share what we found.

Below is the setup and what we observed.

Here’s How We Did It

We provided all three AI systems with the same dataset:

The repository contains two separate Foundry Solidity projects: ƒlayer (flayer/) and the Moongate bridge (moongate/). Flayer is an NFT liquidity protocol with a custom Uniswap v4 hook, plus donate and pool integrations. Moongate is an NFT bridge (L1 → L2) that deploys bridged token contracts on L2 and mints/releases ERC-721/1155 assets, with metadata and royalties handling in progress.

This codebase was previously audited as a public contest running from September 2, 2024 to September 15, 2024; our AI analysis covered both ƒlayer and Moongate because both were in scope.

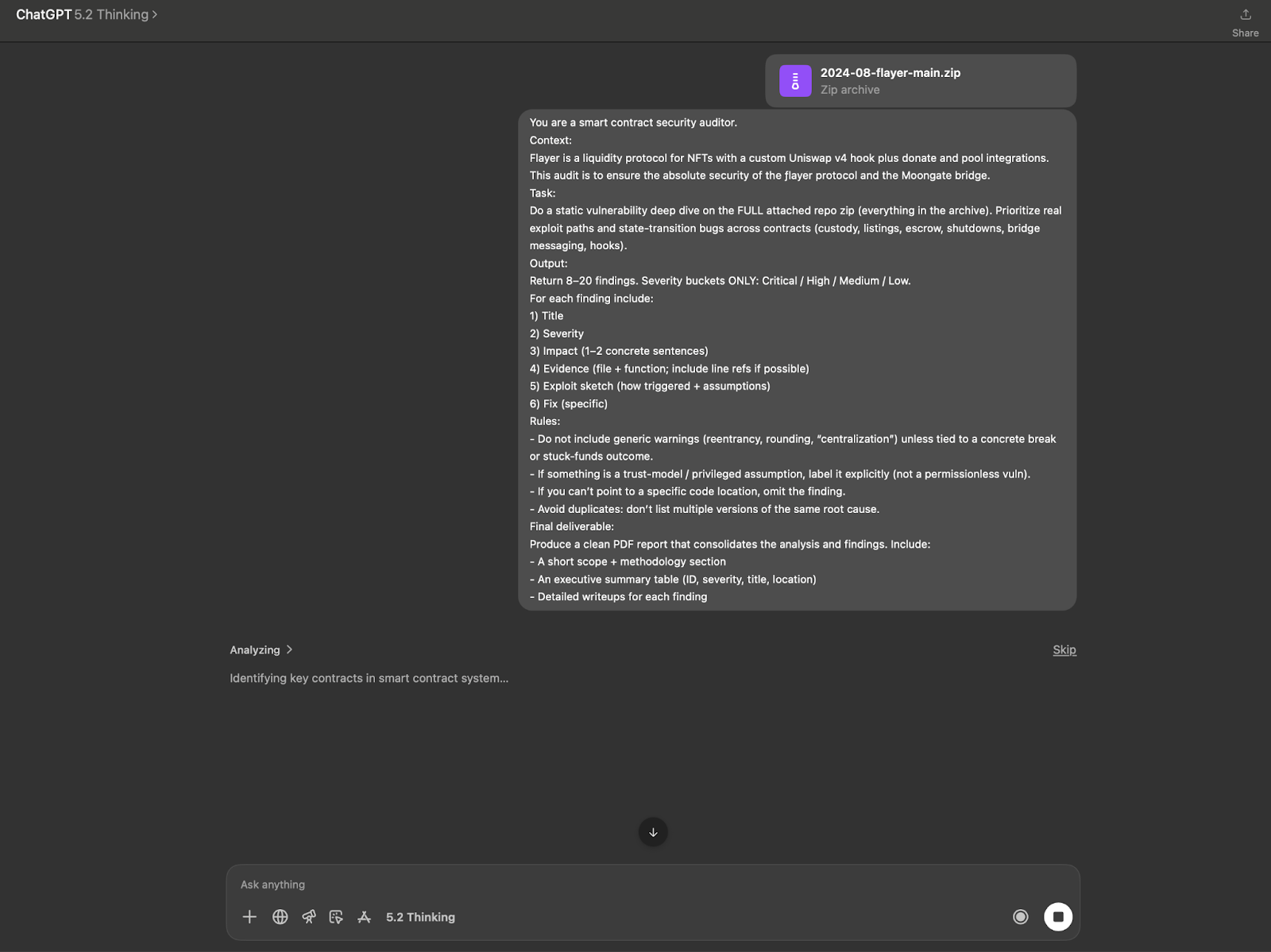

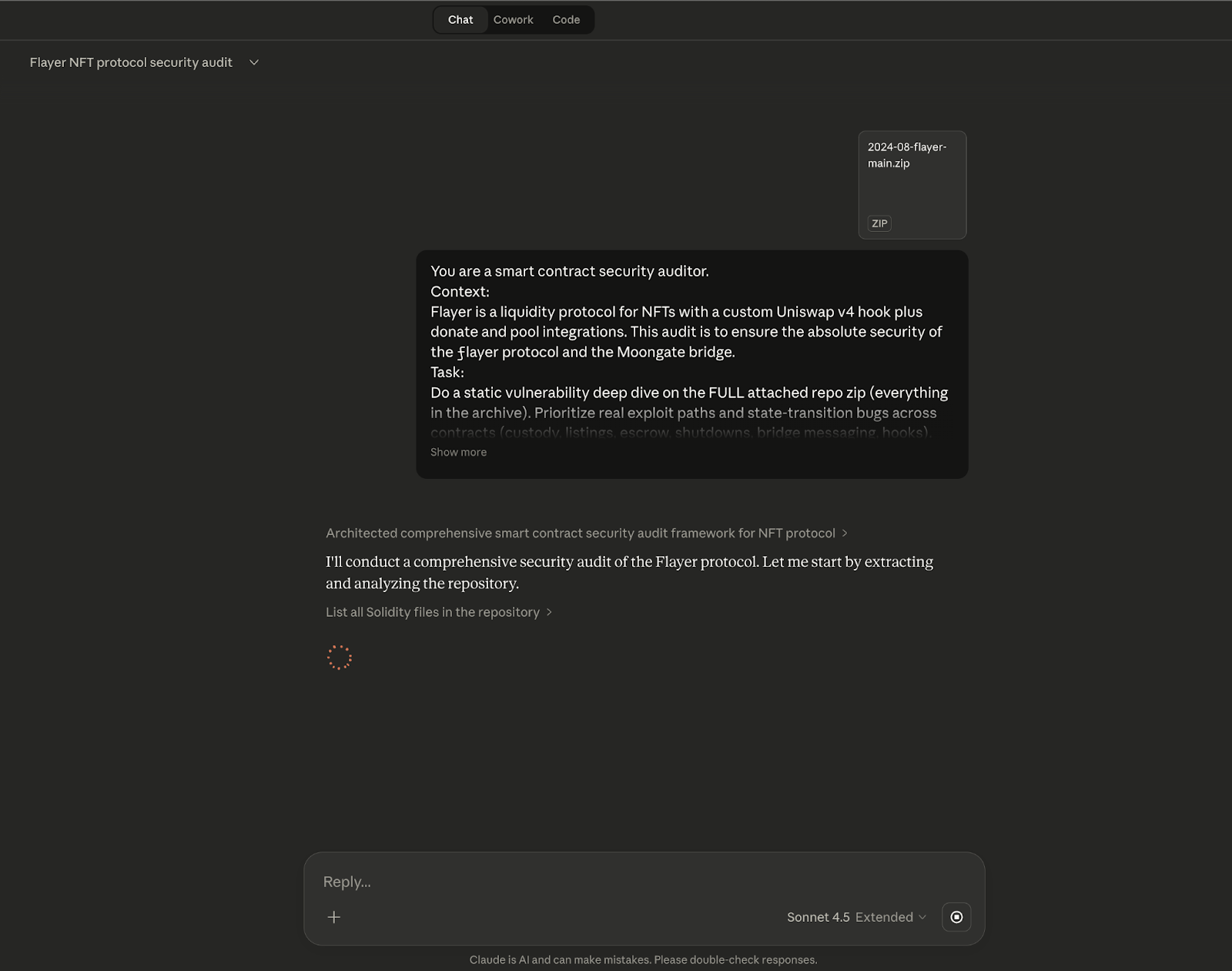

And the same prompt for ChatGPT and Claude:

You are a smart contract security auditor.Context: Flayer is a liquidity protocol for NFTs with a custom Uniswap v4 hook plus donate and pool integrations. This audit is to ensure the absolute security of the ƒlayer protocol and the Moongate bridge.

Task: Do a static vulnerability deep dive on the FULL attached repo zip (everything in the archive). Prioritize real exploit paths and state-transition bugs across contracts (custody, listings, escrow, shutdowns, bridge messaging, hooks).

Output: Return 8–20 findings. Severity buckets ONLY: Critical / High / Medium / Low.

For each finding include: 1) Title, 2) Severity, 3) Impact (1–2 concrete sentences), 4) Evidence (file + function; include line refs if possible), 5) Exploit sketch (how triggered + assumptions), 6) Fix (specific)

Rules: - Do not include generic warnings (reentrancy, rounding, “centralization”) unless tied to a concrete break or stuck-funds outcome., - If something is a trust-model / privileged assumption, label it explicitly (not a permissionless vuln)., - If you can’t point to a specific code location, omit the finding., - Avoid duplicates: don’t list multiple versions of the same root cause.

Final deliverable: Produce a clean report that consolidates the analysis and findings. Include:, - A short scope + methodology section, - An executive summary table (ID, severity, title, location), - Detailed writeups for each finding

ChatGPT 5.2 and Claude Sonnet 4.5 were each run as a single-shot analysis using the exact prompt above, with no follow-up iterations or prompt tweaks midstream. Sherlock AI was run by executing the standard v2.2 audit flow, with no manual intervention or post-processing before evaluation. Every reported item from all three systems was then reviewed by an independent human triager and labeled at minimum as Valid / Invalid / Informational (with duplicates noted where applicable), and evidence quality was judged by whether claims were grounded to specific code locations (file/function, and line references when provided).

Note: Because this repo/contest is older and public (Sep 2024), it has a non-trivial chance of appearing in general-purpose LLM training corpora (ChatGPT/Claude), which could have influenced some outputs and should be treated as a potential confounder.

Note: Newer model versions (ChatGPT 5.3 and Claude Sonnet 4.6) released shortly after this Feb 7, 2026 test, so these results reflect the specific versions evaluated at that time (ChatGPT 5.2 and Claude Sonnet 4.5)

Note: For the human triaging determination of write-ups: Good: clear exploit path, specific code locations with line numbers, actionable fix recommendation. OK: Valid finding but missing some detail (e.g., no line refs, partial exploit path). Bad: Missing concrete mechanism, wrong code location, or unverifiable claim.

ChatGPT 5.2 Performance

ChatGPT 5.2 produced an audit-style report with an executive summary table and 8 findings (F-01–F-08), each grounded to specific files/functions with exploit sketches and fixes. Its best output on this repo was surfacing state/lifecycle issues in the right area (shutdown unwinds and ProtectedListings checkpoint/indexing behavior) in a form a reviewer can trace in code.

Against the independent triage, ChatGPT looks like a candidate-finding generator with uneven precision: it produced meaningful validated items, but roughly the same amount of non-validated output that still costs reviewer time, and one of the validated items was redundant. This makes it useful for generating investigation leads, but not reliable as a low-noise source of verified findings on its own.

Performance breakdown (ChatGPT 5.2):

- Findings reported: 8

- Triage outcome: 4 valid, 4 invalid (≈ 50% precision)

- Duplicates among valid: 1 (3 unique valid / 8 total = 37.5% unique-valid rate)

- Valid severity mix (triage): 2× High, 1× Informational (plus 1× High that was a duplicate)

- Writeup quality (triager rating): 3 findings rated Good, 1 OK, 4 Bad (based on clarity, code grounding, and exploit-path explanation)

Claude Sonnet 4.5 Performance

Claude Sonnet 4.5 produced an audit-style report with an executive summary table and 16 findings (F-01–F-16) spanning the core protocol surfaces (custody in Locker, listings in Listings/ProtectedListings, shutdown mechanics in CollectionShutdown, Uniswap v4 hook integration in UniswapImplementation, and bridge logic in InfernalRiftAbove/Below). On first read it appears “complete”: it assigns severities, provides locations for most items, and includes exploit narratives and suggested fixes in a conventional report structure.

Against the independent triage, Claude’s output is mostly non-validated on this repo: 1 valid, 1 informational, 14 invalid, and the triager rated the writeups Bad across all items. The practical interpretation is that Claude generated broad candidate coverage, but the vast majority of claims did not survive verification—so the report is best treated as a list of investigation prompts, not a dependable set of vulnerabilities.

Performance breakdown (Claude Sonnet 4.5):

- Findings reported: 16 (F-01–F-16)

- Triage outcome: 1 valid, 14 invalid, 1 informational (≈ 6.25% validated rate)

- Duplicates among validated: 0

- Validated severity mix (triage): 1× Medium, plus 1× Informational (not a vulnerability)

- Writeup quality (triager rating): 16 findings rated Bad (based on clarity, code grounding, and exploit-path explanation)

Triage note: Claude's findings were highly compressed (often a claim + function link, without an attack path), so items without a concrete mechanism were marked Invalid.

Sherlock AI v2.2 Performance

Sherlock AI v2.2 produced a materially larger and more granular output on Flayer + Moongate, returning 38 findings across custody, listing lifecycle mechanics, shutdown unwinds, hook integration behavior, and bridge logic. The findings are structured, code-grounded, and scoped to concrete execution paths rather than broad threat narratives. Unlike the single-pass LLM outputs, the report reads less like an essay and more like a systematic traversal of protocol state transitions and edge conditions.

Against the independent triage, Sherlock AI delivered a majority-validated result on this run: 21 valid, 17 invalid. While false positives are still present (as expected in automated static analysis), the ratio moves from “mostly hypotheses” to “mostly usable findings.” In practical terms, this is the difference between a tool that primarily generates investigation leads and a system that produces a substantial volume of findings that survive independent verification.

Performance breakdown (Sherlock AI v2.2):

- Findings reported: 38

- Triage outcome: 21 valid, 17 invalid (≈ 55.3% precision)

- Validated severity mix (triage): 12 High, 9 Medium

- Duplicates among validated: 0 (per triage summary)

- Writeup quality (triager rating): Predominantly Good / OK on validated items (per triage sheet)

Understanding the Results

Sherlock outperformed the two general-purpose LLMs because vulnerability discovery was executed as a workflow rather than a one-shot response. In v2.2, the system runs a staged loop (Plan → Research → Validate → Judge → Report) with persistent job state and a shared repository map, so hypotheses are repeatedly checked against concrete code paths before a finding is produced.

A practical difference is the system layer around the underlying model. Sherlock runs in an orchestration harness that loads the full repository, builds a stable map of files/contracts/functions, and supports structured traversal of references and call flows across iterations. That keeps each step tied to specific code locations instead of relying on a single static pass.

v2.2 also routes work to specialists scoped to particular vulnerability classes, then pushes their hypotheses through validation and judgment gates before reporting.

- Multi-stage refinement: separate hypothesis generation from validation and judgment.

- Structural grounding: keep each step anchored to the same file/function map.

- Deterministic convergence: merge intermediate outputs consistently to avoid contradictions and drift.

General-purpose LLMs underperformed in this setup because they compress search, verification, and reporting into a single pass without durable state or enforced gates. That increases the rate of plausible-but-incorrect claims, especially in multi-contract systems where correctness depends on sequencing, access checks, and cross-contract invariants.

Based on this benchmark, workflow-based AI auditing systems are better suited to produce findings that hold up under independent verification than one-shot, general-purpose LLM outputs. As these systems mature, the practical impact is a higher volume of code-grounded signal per engineering hour: fewer false leads to chase, faster verification of fixes, and better coverage across complex, multi-contract state transitions.

A special thanks to 0xeix for his help triaging these issues.