AI Smart Contract Auditing in Web3: How It Works, Who It’s For, and Why It Matters

An overview of AI smart contract auditing in Web3: what it is, how the technology works, who uses it, and where it fits in the security lifecycle.

AI smart contract auditing is the use of automated tools and large language models to review Web3 smart contracts for potential vulnerabilities. These tools scan code as it’s written or updated and surface likely issues so developers and auditors can address problems earlier in the security lifecycle.

If you work in Web3 development, chances are you’ve started hearing more about “AI auditors” and automated security tools. Teams are shipping faster than ever, and the old model of relying on one or two manual audits before launch isn’t keeping up with the pace of code changes. AI systems have started showing up earlier in the development process, inside audits themselves, and even after deployment. This article breaks down what that tech actually is, what it can and can’t do, and why it’s becoming a standard part of the security workflow.

How AI Smart Contract Auditors Work

AI smart contract auditing tools are systems that analyze Solidity or other Web3 codebases using a mix of traditional static analysis and large-language-model reasoning. Under the hood, they combine pattern detection (reentrancy, access control errors, unsafe external calls), data-flow and control-flow analysis, fuzzing or auto-generated tests, and an LLM that can interpret contract logic, assumptions, and invariants. Most of these tools run multi-step “agent” loops: propose a hypothesis, locate the relevant code, reason about a potential exploit path, and then refine or reject the result. They’re typically built on real security data (past audits, known exploits, and synthetic vulnerable contracts), which helps them spot issues that older lint-style tools either miss or flag too noisily.
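
The agent loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: contract functions are modeled as plain strings in a dict, `propose` uses a crude textual pattern (any low-level `.call{`) to generate reentrancy hypotheses, and `toy_reason` is a hypothetical stand-in for the LLM step that confirms a hypothesis only when a balance write follows the external call.

```python
from dataclasses import dataclass, field

@dataclass
class Hypothesis:
    bug_class: str                 # e.g. "reentrancy"
    function: str                  # function the hypothesis is about
    status: str = "open"           # becomes "confirmed" or "rejected"
    evidence: list = field(default_factory=list)

# Hypothetical contract source, one string per function body.
SOURCE = {
    "withdraw": 'uint amt = balances[msg.sender]; (bool ok,) = msg.sender.call{value: amt}(""); balances[msg.sender] -= amt;',
    "safeWithdraw": 'uint amt = balances[msg.sender]; balances[msg.sender] -= amt; (bool ok,) = msg.sender.call{value: amt}("");',
    "deposit": "balances[msg.sender] += msg.value;",
}

def propose(source):
    """Step 1: crude pattern detection turns raw code into candidate hypotheses."""
    return [Hypothesis("reentrancy", name) for name, body in source.items() if ".call{" in body]

def toy_reason(hypothesis, body):
    """Hypothetical stand-in for the LLM step: is state written after the external call?"""
    call = body.find(".call{")
    if call == -1:
        return "rejected", "no external call found"
    if body.find("-=", call) != -1:
        return "confirmed", "balance is updated after the external call"
    return "rejected", "balance is updated before the external call"

def run_agent_loop(source, hypotheses, reason):
    for h in hypotheses:
        body = source.get(h.function)          # Step 2: locate the relevant code
        if body is None:
            h.status, note = "rejected", "function not found"
        else:
            h.status, note = reason(h, body)   # Step 3: reason about an exploit path
        h.evidence.append(note)                # Step 4: keep the trail for human review
    return hypotheses

for h in run_agent_loop(SOURCE, propose(SOURCE), toy_reason):
    print(h.function, h.status, h.evidence)
```

A production system would iterate further: an inconclusive hypothesis might be refined (for example, by following an internal call into a helper) and re-checked, which this sketch leaves out.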

The purpose of these systems is straightforward: provide continuous, development-time security feedback and reduce the number of trivial or structural issues that reach human auditors. Instead of a single audit before launch, these tools scan pull requests, commits, and updates as they happen, surfacing potential vulnerabilities long before formal review. They help teams iterate safely, shrink audit rework, and maintain guardrails for ongoing changes to the codebase. Some tools extend into post-deploy monitoring, re-scanning code or behavior as protocols evolve. Across the ecosystem, they’re not positioned as replacements for human audits but as force multipliers that make the overall security lifecycle faster, clearer, and more consistent.

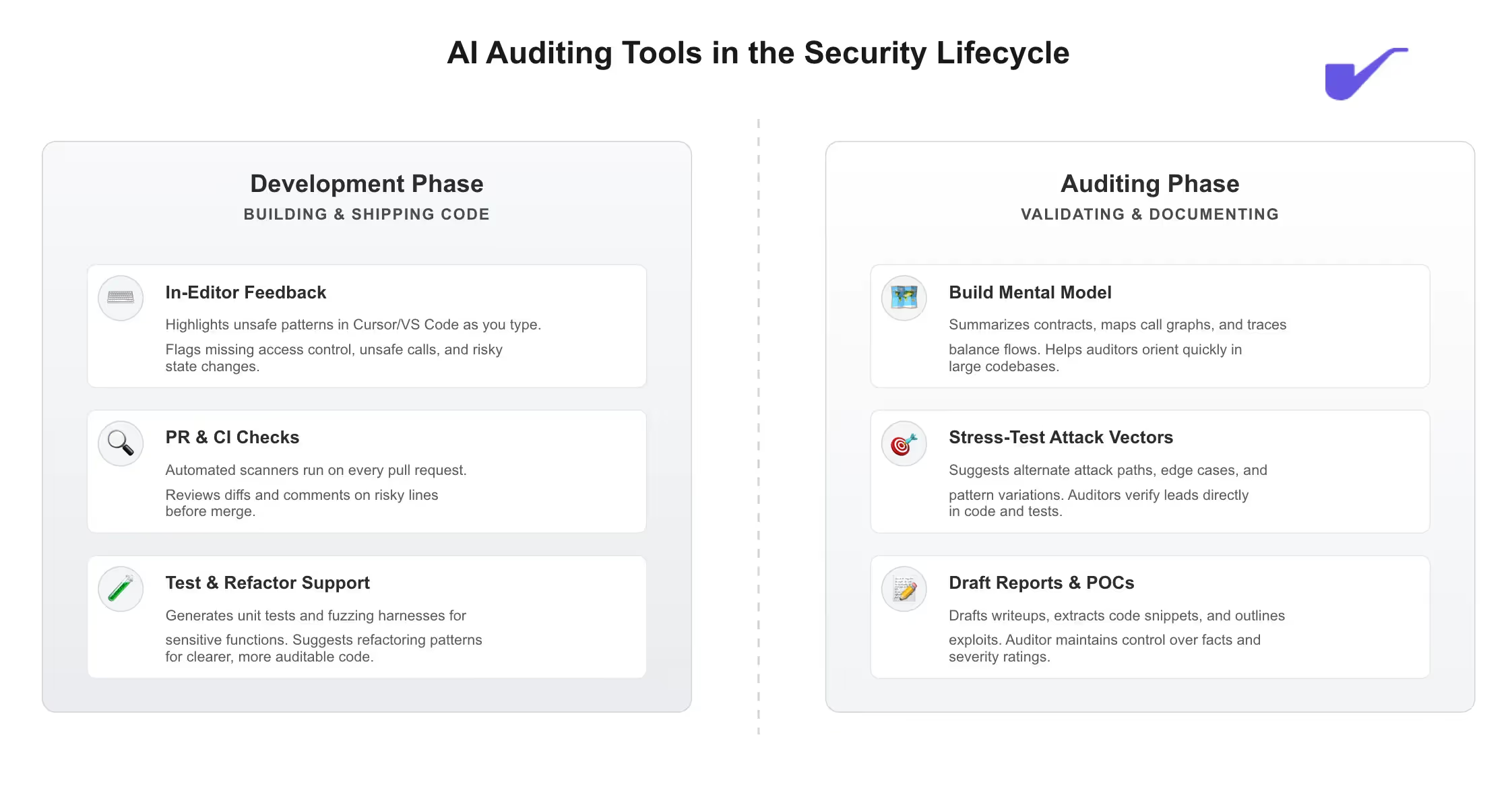

Where AI Auditing Tools Fit in the Security Lifecycle

AI in the Development Process

During development, AI tools sit directly in the flow of writing and shipping code. The focus is keeping security visible while developers are actively building, not after the fact.

1) In-editor feedback while writing code

Developers use AI inside their editor (Cursor, VS Code plugins, etc.) to highlight unsafe patterns as they type: missing access control, unsafe external calls, sloppy math, or suspicious state changes. The model can explain what a function is doing, flag risky branches, and point out places that deserve a second look.
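
To make the “missing access control” case concrete, here is a deliberately crude Python sketch of the kind of check an editor integration might run on save. It is an assumption-laden illustration, not a real tool: functions are found with a regular expression plus brace matching, `SENSITIVE` is a hypothetical list of privileged state variables, and `GUARDS` is a hypothetical list of modifier names treated as access control. Real tools work from a parsed AST rather than raw text.

```python
import re

# "function name(params) <visibility/modifiers> {"; group 2 holds the modifiers.
FUNC_HEADER = re.compile(r"function\s+(\w+)\s*\([^)]*\)([^{;]*)\{")
SENSITIVE = ("owner", "feeBps", "paused")   # hypothetical privileged state variables
GUARDS = ("onlyOwner", "onlyRole")          # hypothetical access-control modifiers

def iter_functions(src):
    """Yield (name, modifiers, body, line) for each function, using brace matching."""
    for m in FUNC_HEADER.finditer(src):
        depth, i = 1, m.end()
        while depth and i < len(src):
            depth += {"{": 1, "}": -1}.get(src[i], 0)
            i += 1
        line = src.count("\n", 0, m.start()) + 1
        yield m.group(1), m.group(2), src[m.end():i - 1], line

def missing_access_control(src):
    findings = []
    for name, mods, body, line in iter_functions(src):
        if not re.search(r"\b(public|external)\b", mods):
            continue  # internal/private functions are not directly callable
        if any(g in mods for g in GUARDS):
            continue
        for var in SENSITIVE:
            # An assignment such as "owner = ..." but not a comparison "owner == ...".
            if re.search(rf"\b{re.escape(var)}\s*=(?!=)", body):
                findings.append((line, name, var))
    return findings

SAMPLE = """contract Vault {
    address public owner;
    uint256 public feeBps;

    function setOwner(address o) external {
        owner = o;
    }

    function setFee(uint256 f) external onlyOwner {
        feeBps = f;
    }

    function isOwner(address a) public view returns (bool) {
        return owner == a;
    }
}"""

for line, fn, var in missing_access_control(SAMPLE):
    print(f"line {line}: {fn}() writes {var} without an access-control modifier")
```

Only `setOwner` is reported: `setFee` carries a guard modifier, and `isOwner` compares `owner` rather than assigning it.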

2) PR and CI checks on every change

When code is pushed to GitHub or GitLab, AI-backed scanners run on each pull request. They review the diff, look for newly introduced vulnerabilities, and comment directly on lines that might be dangerous. This keeps security in the same place developers already work, and turns “security review” into something that happens on every change, not just at audit time.
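
A PR check only needs to look at what the change adds. The Python sketch below parses a unified diff, tracks line numbers in the new file so each comment can be attached to the right line, and flags a few risky Solidity constructs on added lines only. The `RISKY` table and the `review_diff` helper are hypothetical; an AI-backed scanner would send the changed hunks to a model or analyzer instead of matching substrings, then post results through the Git host’s review interface.

```python
import re

# Captures the starting line number in the new file from "@@ -a,b +c,d @@".
HUNK = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")

# Hypothetical rules: substring -> review comment.
RISKY = {
    "tx.origin": "tx.origin used for authorization; prefer msg.sender",
    "delegatecall": "delegatecall added; confirm the target is trusted",
    "selfdestruct": "selfdestruct added; confirm this is intended",
}

def review_diff(diff_text):
    comments, path, new_line = [], None, 0
    for raw in diff_text.splitlines():
        if raw.startswith("+++ "):
            path = raw[4:].removeprefix("b/")
        elif raw.startswith("--- ") or raw.startswith("\\"):
            continue  # old-file header or "\ No newline at end of file"
        elif (m := HUNK.match(raw)):
            new_line = int(m.group(1))
        elif raw.startswith("+"):
            comments += [(path, new_line, msg) for pat, msg in RISKY.items() if pat in raw]
            new_line += 1
        elif raw.startswith("-"):
            continue  # removed lines do not exist in the new file
        else:
            new_line += 1  # unchanged context line
    return comments

DIFF = """--- a/contracts/Vault.sol
+++ b/contracts/Vault.sol
@@ -10,3 +10,4 @@
     function withdraw() external {
-        require(msg.sender == owner);
+        require(tx.origin == owner);
+        payable(msg.sender).transfer(address(this).balance);
     }
"""

for path, line, msg in review_diff(DIFF):
    print(f"{path}:{line}: {msg}")
```

Scanning only added lines keeps the comments focused on what the pull request introduced, which is the behavior described above.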

3) Support for tests and refactors

Developers also use AI to sketch out unit tests or fuzzing harnesses for sensitive parts of the code and to refactor fragile logic into clearer patterns. That makes it easier for both future contributors and auditors to understand what the contract is supposed to do and where the risk boundaries sit.
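
As an illustration of the kind of harness a developer might ask an AI to sketch, here is a small invariant fuzzer in Python. The contract is modeled as a hypothetical `ToyVault` class rather than real Solidity, and the invariant (total supply equals the sum of user balances) is an assumed example property; in a Foundry or Echidna setup, the same idea would be written as an invariant test against the contract itself.

```python
import random

class ToyVault:
    """Hypothetical Python model of a vault contract (not real Solidity)."""

    def __init__(self, buggy=False):
        self.balances = {}
        self.total = 0
        self.buggy = buggy  # when True, withdraw() forgets to update total

    def deposit(self, user, amount):
        self.balances[user] = self.balances.get(user, 0) + amount
        self.total += amount

    def withdraw(self, user, amount):
        if self.balances.get(user, 0) < amount:
            return  # a real contract would revert here
        self.balances[user] -= amount
        if not self.buggy:
            self.total -= amount

def invariant_holds(vault):
    """Assumed invariant: total supply equals the sum of all balances."""
    return vault.total == sum(vault.balances.values())

def fuzz(make_vault, runs=200, steps=20, seed=0):
    """Run random deposit/withdraw sequences; return the first trace that breaks the invariant."""
    rng = random.Random(seed)
    for _ in range(runs):
        vault, trace = make_vault(), []
        for _ in range(steps):
            op = rng.choice(["deposit", "withdraw"])
            user, amount = rng.choice("abc"), rng.randint(1, 100)
            getattr(vault, op)(user, amount)
            trace.append((op, user, amount))
            if not invariant_holds(vault):
                return trace
    return None

print("fixed vault:", fuzz(ToyVault))
print("buggy vault:", fuzz(lambda: ToyVault(buggy=True)))
```

The returned trace doubles as a reproduction recipe, which is what makes a harness like this useful to auditors as well as developers.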

The goal in this phase: keep security top of mind while code is being written and merged, so fewer issues make it to the formal audit.

AI in the Auditing Workflow

During audits, AI tools are used by security researchers rather than application devs. The focus shifts from writing new code to understanding, probing, and documenting existing code.

1) Build a fast mental model of the protocol

Auditors use AI to summarize contracts, map call graphs, and track how balances or positions move through the system. This helps them get oriented in large codebases without manually stepping through every line first.
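
Call-graph mapping is one of the easier pieces to illustrate. The Python sketch below pulls function bodies out of Solidity source with a regular expression and brace matching, then records which other local functions each body calls. It is an assumption-heavy approximation: it ignores overloads, inheritance, comments, and calls into other contracts, and it skips recursion. Real tools work from a compiler AST, such as the one `solc` can emit, rather than raw text.

```python
import re

# Matches "function name(params) <modifiers> {" and stops before the body.
FUNC_HEADER = re.compile(r"function\s+(\w+)\s*\([^)]*\)[^{;]*\{")

def function_bodies(src):
    """Map each function name to its body text, using brace matching."""
    bodies = {}
    for m in FUNC_HEADER.finditer(src):
        depth, i = 1, m.end()
        while depth and i < len(src):
            depth += {"{": 1, "}": -1}.get(src[i], 0)
            i += 1
        bodies[m.group(1)] = src[m.end():i - 1]
    return bodies

def call_graph(src):
    """Map each function to the sorted list of other local functions it calls."""
    bodies = function_bodies(src)
    return {
        name: sorted(c for c in bodies
                     if c != name and re.search(rf"\b{re.escape(c)}\s*\(", body))
        for name, body in bodies.items()
    }

SAMPLE = """contract Pool {
    function deposit(uint a) external { _mint(msg.sender, a); _sync(); }
    function withdraw(uint a) external { _burn(msg.sender, a); _sync(); }
    function _mint(address to, uint a) internal { if (a > 0) { supply += a; } }
    function _burn(address from, uint a) internal { supply -= a; }
    function _sync() internal { }
}"""

for fn, callees in call_graph(SAMPLE).items():
    print(fn, "->", callees or "(no local calls)")
```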

2) Stress-test leads and find variants

Once an auditor has a possible bug, they describe it to the model and ask for alternate paths, edge cases, or related patterns. The AI might suggest variations on the attack that the auditor then checks directly in code or tests.
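
As a hypothetical example of checking a suggested variant: suppose an auditor suspects that a vault’s share calculation rounds small deposits down to zero shares once an attacker has inflated the vault’s assets, and the model proposes boundary amounts to try. The Python sketch below mirrors Solidity’s truncating integer division for an assumed formula `shares = amount * totalShares / totalAssets` and reports which candidate deposits would mint nothing. The formula and numbers are illustrative assumptions, not taken from any specific protocol.

```python
def shares_for(amount, total_assets, total_shares):
    """Assumed share formula; // matches Solidity's uint division (truncation) for non-negative values."""
    if total_shares == 0:
        return amount  # assumed 1:1 mint for the first depositor
    return amount * total_shares // total_assets

def zero_share_deposits(total_assets, total_shares, candidates):
    """Return candidate deposit amounts that would mint zero shares."""
    return [a for a in candidates
            if a > 0 and shares_for(a, total_assets, total_shares) == 0]

# Edge cases a model might suggest: the smallest unit, and amounts around the share price.
inflated = zero_share_deposits(total_assets=1_000, total_shares=1, candidates=[1, 999, 1_000, 1_001])
healthy = zero_share_deposits(total_assets=1_000, total_shares=1_000, candidates=[1, 10])
print("inflated vault, zero-share deposits:", inflated)
print("healthy vault, zero-share deposits:", healthy)
```

The model only proposes the inputs; the auditor still decides whether a zero-share deposit is reachable and harmful in the real contract.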

3) Draft reports and POCs faster

After the auditor confirms a finding, AI is used to draft the initial writeup, pull the right snippets, and outline a proof-of-concept. The researcher keeps control of the facts and severity, but offloads a lot of the repetitive writing.
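
Report drafting is mostly templating once the facts are fixed. The Python sketch below shows one way to keep that split explicit: the auditor supplies the confirmed facts and severity in a hypothetical `Finding` record, and only the formatting is automated. The field names and severity scale are assumptions; audit firms use their own report formats.

```python
from dataclasses import dataclass, field

SEVERITIES = ("High", "Medium", "Low", "Informational")

@dataclass
class Finding:
    title: str
    severity: str          # chosen by the auditor, never by the model
    location: str          # file and line, e.g. "Vault.sol:42"
    summary: str           # draft text, reviewed by the auditor
    poc_steps: list = field(default_factory=list)

    def __post_init__(self):
        if self.severity not in SEVERITIES:
            raise ValueError(f"unknown severity: {self.severity!r}")

def render(f: Finding) -> str:
    lines = [
        f"[{f.severity}] {f.title}",
        f"Location: {f.location}",
        "",
        f.summary,
        "",
        "Proof of concept (outline):",
    ]
    lines += [f"{i}. {step}" for i, step in enumerate(f.poc_steps, start=1)]
    return "\n".join(lines)

draft = Finding(
    title="Reentrancy in withdraw()",
    severity="High",
    location="Vault.sol:42",
    summary="withdraw() sends ETH before reducing the caller's balance.",
    poc_steps=["Deploy an attacker contract with a receive() hook",
               "Deposit 1 ETH and call withdraw()",
               "Re-enter withdraw() from receive() until the vault is drained"],
)
print(render(draft))
```

Validating severity against a fixed list is a small guard that keeps a drafted report from quietly inventing its own rating.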

Across both phases, the pattern is: developers use AI while creating and changing code; auditors use AI while understanding and validating it.

The Raw Benefits and Value of Web3 AI Auditors

For most teams, the value of AI auditing tools shows up in how much work they can ship, how often they need to go back and fix things, and how much they spend on security reviews. By catching obvious mistakes earlier, speeding up context-building for auditors, and automating the dull parts of reporting, these systems reduce the human time spent on repetitive work and cut down on surprise issues late in the process.

- Reduced audit spend / security cost – Cleaner code and faster prep mean smaller audit scopes and lower overall audit and post-audit remediation costs.

- Less rework and fewer rounds of fixes – Cleaner code going into an audit means fewer back-and-forth cycles, fewer re-audits, and less engineering time spent rewriting core logic under time pressure.

- Lower security burn over time – Continuous checks on PRs and upgrades help teams avoid shipping regressions that would later demand expensive reviews or incident response.

How Human Auditors and AI Work Together

AI auditors change the workflow, but they don’t replace the role of human researchers. The systems are best at scanning large amounts of code, tracing basic logic paths, and producing consistent explanations or drafts. Humans remain responsible for understanding protocol intent, evaluating economic risk, and identifying novel attack surfaces that aren’t captured in code patterns or historical data. In practice, teams use AI to handle the repetitive parts of security work while auditors focus on reasoning, edge cases, and the areas where real impact is determined.

This division of labor makes audits faster and more thorough: AI surfaces leads and handles documentation, and auditors confirm whether something is exploitable, how severe it is, and how it fits into the protocol’s broader behavior. The two sides complement each other. AI increases coverage and reduces noise, while human judgment determines what actually matters.

Leading AI Auditing Products Used in Web3 Today

Several AI-focused auditing systems are now used across Web3 teams. The three below stand out based on publicly documented usage, technical detail, and evidence of real-world deployment.

1. Sherlock AI

Sherlock AI is built on verified audit findings, contest submissions, and exploited codebases collected through Sherlock’s security programs. It provides continuous analysis on PRs and code changes, using multi-step reasoning to trace state transitions and identify logic-level issues. Its value comes from being trained on real vulnerability data and from active use in live development pipelines, where it reduces audit rework and flags issues before human review.

2. Olympix

Olympix focuses on development workflow integration. It runs automated checks in CI/CD, mutation-based tests, and pre-deploy scans to catch regressions or unsafe changes. Its strength is consistency: each code change is evaluated the same way, making it useful for teams shipping frequent updates. Its public documentation emphasizes DevSecOps workflows rather than deep reasoning.

3. Almanax

Almanax provides an AI model aimed at identifying more complex logical issues, supported by its open dataset initiative (“Web3 Security Atlas”). Its system is intended to help engineers reason about protocol behavior before audits. Public deployments are limited, but the technical approach (focusing on logic flows rather than pattern detection) makes it a noteworthy entrant.
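
Mutation-based testing, listed above among Olympix’s checks, asks whether a test suite would notice small deliberate bugs. The Python sketch below is a generic illustration of the technique, not Olympix’s implementation: it mutates comparison and boolean operators in a hypothetical `can_withdraw` check (a stand-in for contract logic), runs each mutant against a test function, and lists the mutants that survive. A surviving mutant points to behavior the tests never pin down, such as the `>=` versus `>` boundary here.

```python
import re

# Hypothetical logic under test (a stand-in for a contract's withdraw check).
ORIGINAL = """
def can_withdraw(balance, amount):
    return amount > 0 and balance >= amount
"""

# Operators to mutate and what each one is swapped for.
SWAPS = {">=": ">", ">": ">=", "and": "or"}
OPS = re.compile(r">=|>(?!=)|\band\b")

def mutants(src):
    """Yield one mutant per operator occurrence, changing only that occurrence."""
    for m in OPS.finditer(src):
        yield src[:m.start()] + SWAPS[m.group()] + src[m.end():]

def load(src):
    namespace = {}
    exec(src, namespace)  # acceptable for a sketch; never exec untrusted code
    return namespace["can_withdraw"]

def weak_tests(fn):
    return fn(100, 50) and not fn(10, 50)

def strong_tests(fn):
    # Adds the boundary cases the weak suite misses.
    return weak_tests(fn) and fn(50, 50) and not fn(50, 0)

def surviving_mutants(src, tests):
    return [m for m in mutants(src) if tests(load(m))]

print("weak suite survivors:", len(surviving_mutants(ORIGINAL, weak_tests)))
print("strong suite survivors:", len(surviving_mutants(ORIGINAL, strong_tests)))
```

The weak suite lets two mutants through (`amount >= 0` and `balance > amount`); adding the two boundary cases kills both.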

Final Thoughts

AI auditors are reshaping how teams approach smart contract security. They shift more checks into development, give researchers better starting points, and reduce the amount of repetitive work around reviews. Human auditors still drive the final decisions, but AI is now part of the workflow that gets protocols ready for those deeper checks. As the tooling improves, this mix of human judgment and machine-driven analysis will become the default for teams that ship often and want fewer surprises.

If your team wants development-time feedback grounded in real audit findings, reach out to the Sherlock team today to schedule a free walkthrough.

FAQ

1. Can AI auditors actually find real, high-severity vulnerabilities in production code?

Yes, but with limits. Modern AI auditors can catch common high-impact categories (reentrancy, access control errors, broken assumptions, unsafe state transitions) in real codebases, especially when trained on verified audit findings. They are not reliable at catching complex economic exploits or entirely novel attack patterns, which still require human reasoning.

2. Do AI tools replace the need for a traditional security audit?

No. AI removes a large portion of trivial or structural issues before an audit begins, but human auditors still determine exploitability, assess economic risk, and evaluate how the system behaves as a whole. AI shrinks audit scope; it doesn’t eliminate it.

3. Are AI auditing tools safe to use with proprietary or private code?

Most production tools run on private, sandboxed infrastructure and don’t train on your code, but teams should always check each vendor’s data policy. The vendors covered above (Sherlock AI, Olympix, Almanax) explicitly avoid training on customer repositories and isolate all scans.

4. What types of vulnerabilities are AI tools bad at detecting?

Models still struggle with:

- multi-contract interactions that require deep economic reasoning

- protocol-level incentives or MEV-related attacks

- cross-chain assumptions or oracle manipulation subtleties

- any bug class that doesn’t have strong historical examples in training data

These are the areas where auditors consistently outperform AI today.

5. How fast can a team expect feedback from an AI auditor?

Most systems run either on demand or continuously, with findings appearing within seconds to minutes of each pull request. Full-repository scans take anywhere from a few minutes to under an hour, depending on codebase size. This fast turnaround is what makes AI well suited to high-iteration teams pushing changes daily.