What is Collaborative Smart Contract Auditing?

Learn how collaborative smart contract auditing combines expert-led code reviews with large-scale researcher contests to deliver deeper, broader security coverage.

Collaborative smart contract auditing is a model in which a core team of senior auditors works directly with a protocol’s developers to review code in depth, and the code is later stress-tested by a wider pool of independent researchers through open contests. This dual approach combines expert precision with broad coverage, reducing the chance that critical vulnerabilities slip through.

What is Collaborative Smart Contract Auditing?

There comes a point in every development cycle where a team needs to pause and ask the hard question: is this code really ready for mainnet? For most protocols, that checkpoint has traditionally been a single audit - a fixed team of researchers reviewing a snapshot of the codebase before launch. But as smart contracts have grown more complex and capital at risk has increased, protocols need a deeper, more integrated approach to security.

Collaborative smart contract auditing is a detailed, multi-layered process that brings together expert auditors, automated analysis, and close cooperation with the development team. The goal is to uncover vulnerabilities, bugs, and inefficiencies before deployment, and to work with the client to resolve them. By combining manual review with advanced tooling and direct communication, collaborative auditing helps teams refine their code, strengthen functionality, and launch with confidence that their contracts will perform as intended.

How Does Collaborative Auditing Actually Work in Practice?

Teams typically engage in a collaborative audit when their codebase is approaching launch and they need higher assurance than a single static review can provide. The process combines structured manual review, AI tooling, and direct collaboration between auditors and the development team to uncover vulnerabilities early and strengthen the system’s overall design.

1. Focused Review - A team of senior auditors works directly with the developers, reviewing architecture, logic, and assumptions line by line.

2. Automated Analysis - Static analysis and data-flow tracing tools are used alongside manual review to flag potential weaknesses across all code paths.

3. Validation & Remediation - Findings are triaged by severity, discussed with the development team, and addressed collaboratively to ensure fixes are correctly implemented.

4. Verification - Once updates are complete, auditors recheck the remediated code to confirm that vulnerabilities have been fully resolved.
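The triage and verification steps above can be sketched as a small piece of bookkeeping. The Python example below is a minimal illustration rather than any audit firm's actual tooling: the `Severity` scale, the `Finding` fields, the helper names, and the sample findings are all hypothetical. It orders findings by severity and then lists those that still block a launch because they have not been both fixed and rechecked.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical four-level scale; real engagements define their own.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass
class Finding:
    title: str
    severity: Severity
    fixed: bool = False      # developers have pushed a fix
    verified: bool = False   # auditors have rechecked the fix


def triage(findings):
    """Return findings ordered from most to least severe."""
    return sorted(findings, key=lambda f: f.severity, reverse=True)


def unresolved(findings, threshold=Severity.HIGH):
    """Findings at or above `threshold` that are not both fixed and verified."""
    return [
        f for f in findings
        if f.severity >= threshold and not (f.fixed and f.verified)
    ]


# Made-up sample findings, for illustration only.
findings = [
    Finding("Unused import in helper contract", Severity.LOW),
    Finding("Reentrancy in withdraw path", Severity.CRITICAL),
    Finding("Missing access control on setFee", Severity.HIGH),
]

ordered = triage(findings)

# Simulate remediation and verification of the most severe finding only.
ordered[0].fixed = True
ordered[0].verified = True

blockers = unresolved(findings)
print([f.title for f in ordered])
print([f.title for f in blockers])
```

Tracking `fixed` and `verified` as separate flags mirrors the distinction between remediation and verification: in this sketch a fix only stops counting as a blocker once an auditor has rechecked it.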

The result is a codebase that has been thoroughly reviewed, verified, and refined through a shared process, leaving teams confident that what they’ve built is ready for mainnet.

When Do Teams Typically Need a Collaborative Audit?

Teams usually turn to a collaborative audit in the final stages before a major launch or upgrade, when the stakes are highest and user trust is on the line. At this stage, protocols need both the depth of senior experts and the broader coverage of open researcher contests to surface issues that might have been missed earlier. It’s the checkpoint that transforms months of development into a codebase ready for mainnet. For this reason, teams often run multiple rounds of collaborative audits with different groups or organizations to reduce the chance that bugs slip through the cracks.

Audit Contests vs. Collaborative Audits

It’s worth noting the difference between collaborative audits and audit contests. While both are designed to uncover vulnerabilities, they operate in different ways and fit different moments in a protocol’s lifecycle.

Collaborative Audits

A collaborative audit is a structured engagement where a curated team of senior auditors works directly with a project’s developers. This model is best suited for new launches, major upgrades, or when a protocol needs detailed remediation guidance. The tight feedback loop helps teams refine design choices, fix issues in context, and prepare with confidence for deployment.

Audit Contests

Audit contests open the codebase to a broad pool of independent researchers competing to find vulnerabilities. This format is best for stress-testing a mature codebase, catching edge cases that smaller teams may overlook, and applying adversarial pressure at scale. It’s less about back-and-forth with developers and more about coverage through diversity of thought.

Neither model replaces the other. Collaborative audits deliver depth and structured guidance, while contests deliver breadth and adversarial testing. When combined, they form a stronger process: protocols gain the precision of hands-on review and the resilience that comes from withstanding hundreds of independent researchers trying to break the system.

Conclusion

As smart contracts grow in complexity, the stakes for security have never been higher. Traditional audits alone can’t account for the constant evolution of live systems or the growing sophistication of onchain exploits. Collaborative auditing addresses this reality - pairing senior auditors, automated analysis, and direct developer collaboration to uncover vulnerabilities early and strengthen code before it reaches mainnet.

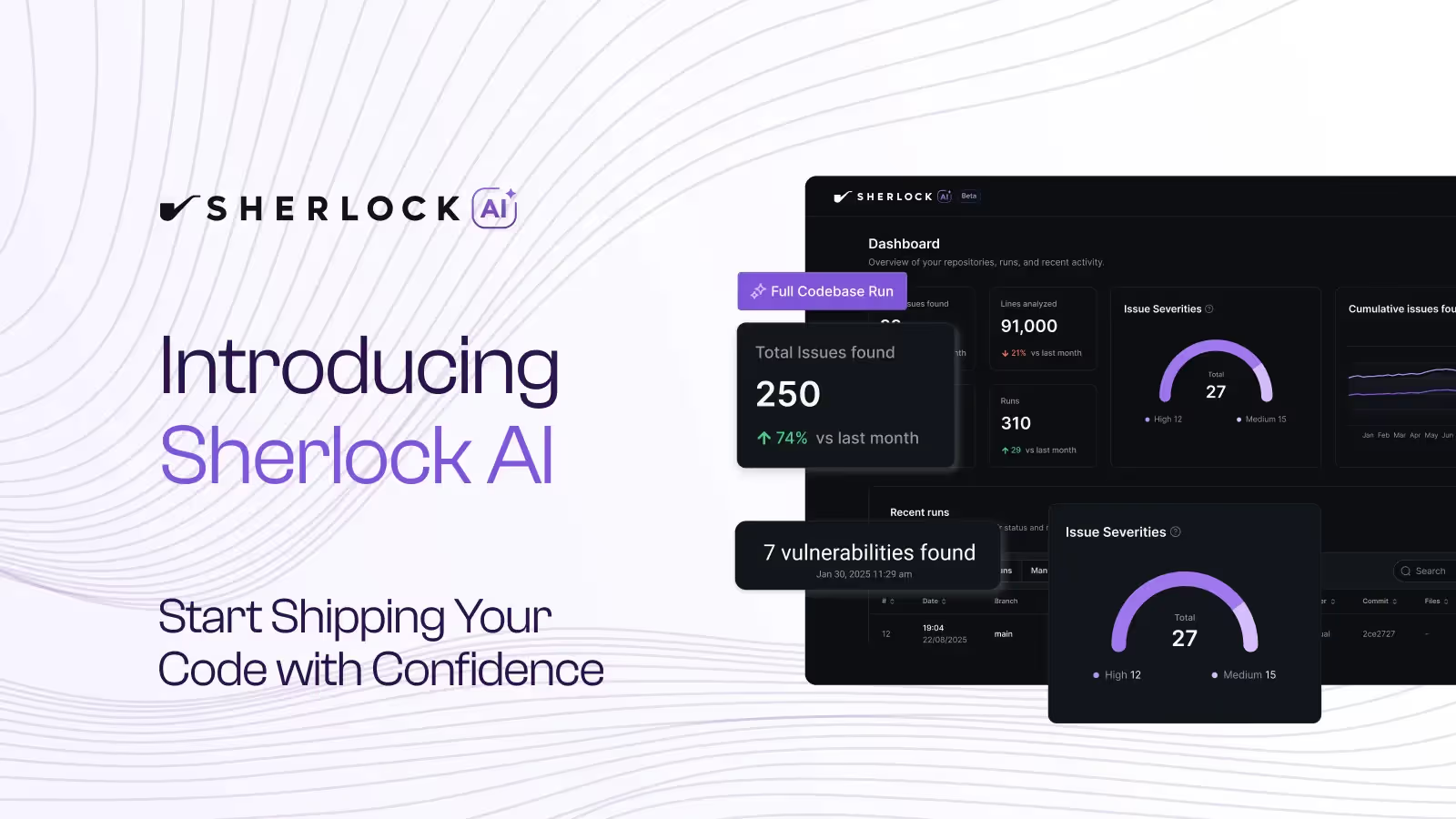

At Sherlock, we’ve built our collaborative audit framework around this philosophy. Every engagement brings together auditors with verified track records across all major Web3 verticals, matched to each project using performance data from thousands of prior findings. The result is a process designed for the realities of modern smart contract development - adaptive, thorough, and built to move with your code.

If you’re preparing for a launch or upgrade, contact us to learn how Sherlock’s collaborative auditing can help you ship securely and with confidence.