How Sherlock AI Uncovered a $2M Vulnerability on Mainnet

Sherlock AI discovered a Critical vulnerability affecting $2,400,000 in a live lending protocol. This is the first known instance of an AI uncovering a multi-million-dollar bug on mainnet.

Smart contracts live or die on the strength of their accounting. For one lending protocol preparing to go live with millions of dollars in deposits, that truth nearly played out in the worst possible way. A subtle math error in its withdrawal logic opened the door to an exploit that could have quietly drained its reserves. The total exposure was about $2 million in user funds.

The significance? It wasn’t a human auditor who found the problem. It was Sherlock AI. What follows is a step-by-step look at how this vulnerability was discovered, how it could have played out, and why it signals a shift in how Web3 projects secure their contracts.

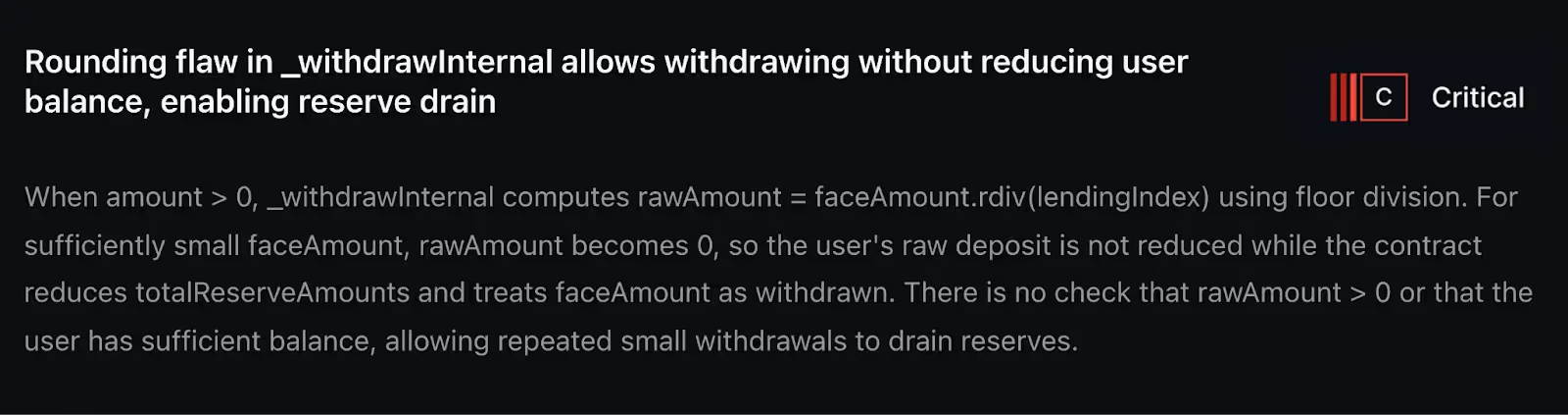

The Vulnerability

On the surface, the bug looked unremarkable. It lived inside the withdrawal function, the very place where user balances and reserves are supposed to remain perfectly in sync. When a user withdrew funds, the system did two things:

- Converted the requested amount into a “scaled” unit to update the internal accounting ledger.

- Subtracted the face value from the protocol’s reserves and sent tokens to the user.

That design is standard, but here the math betrayed the protocol. For very small requests, the scaled conversion rounded the change down to zero, so the system deducted nothing from the user's balance while still sending real tokens out of the reserves.

In other words: the books showed no change, but the vault got lighter.
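To make the two steps concrete, here is a hypothetical Python toy model, not the protocol's actual code. It assumes Aave-style 27-decimal fixed point (RAY = 10**27) and uses an exaggerated index of 3.0 so the rounding is easy to see. `ToyPool` and `withdraw_buggy` are illustrative names.

```python
from dataclasses import dataclass, field

RAY = 10**27  # assumed 27-decimal fixed-point base (Aave-style "ray")


@dataclass
class ToyPool:
    """Hypothetical toy pool reproducing the two-step withdrawal described above."""
    index: int     # liquidity index, expressed in RAY units
    reserves: int  # underlying tokens the pool actually holds
    scaled_balances: dict = field(default_factory=dict)  # internal ledger

    def withdraw_buggy(self, user: str, face_value: int) -> None:
        balance = self.scaled_balances.get(user, 0)
        # Step 1: convert the request to scaled units. Integer division
        # rounds down, so tiny requests become 0.
        scaled_delta = face_value * RAY // self.index
        if scaled_delta > balance:
            raise ValueError("insufficient balance")
        self.scaled_balances[user] = balance - scaled_delta
        # Step 2: subtract the full face value from reserves and pay it out.
        if face_value > self.reserves:
            raise ValueError("insufficient reserves")
        self.reserves -= face_value


# Exaggerated, hypothetical index (3.0) so the rounding is easy to see.
pool = ToyPool(index=3 * RAY, reserves=1_000, scaled_balances={"attacker": 0})
pool.withdraw_buggy("attacker", 2)
print(pool.scaled_balances["attacker"], pool.reserves)  # 0 998
```

The attacker starts with an empty ledger entry, passes the balance check because the scaled amount is zero, and still walks away with two tokens.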

How the Exploit Could Have Played Out

The effect of this discrepancy is like a leaky faucet. Each withdrawal may be tiny, but the reserves drop all the same. With automation, an attacker could loop thousands of these micro-withdrawals in succession, each call bleeding the pool a little more until the reserves are empty.

From the outside, the protocol would look intact, with users' balances unchanged in the ledger. But behind the scenes, the reserves that backed those balances would be evaporating.
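The loop can be sketched the same way. This is a hypothetical Python model under the same assumptions (RAY = 10**27, an exaggerated index of 3.0); `micro_withdraw` and `drain` are illustrative names, and the toy ignores gas costs, which in practice decide whether such a loop pays off.

```python
RAY = 10**27  # assumed 27-decimal fixed-point base


def micro_withdraw(ledger: int, reserves: int, face_value: int, index: int) -> tuple[int, int]:
    """One call of the flawed withdrawal; returns (new_ledger, new_reserves)."""
    scaled_delta = face_value * RAY // index  # rounds down, possibly to 0
    if scaled_delta > ledger:
        raise ValueError("insufficient balance")
    return ledger - scaled_delta, reserves - face_value


def drain(reserves: int, index: int) -> tuple[int, int, int]:
    """Repeat the largest zero-rounding withdrawal until reserves run out.
    Returns (attacker_ledger, remaining_reserves, number_of_calls)."""
    step = (index - 1) // RAY  # largest face value whose scaled_delta is 0
    ledger, calls = 0, 0       # the attacker never deposits anything
    while step > 0 and reserves >= step:
        ledger, reserves = micro_withdraw(ledger, reserves, step, index)
        calls += 1
    return ledger, reserves, calls


print(drain(reserves=1_000, index=3 * RAY))  # (0, 0, 500)
```

Five hundred calls empty the toy pool while the attacker's ledger entry never moves off zero.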

Sherlock AI's report estimated the risk: at the time, the protocol held ~$2 million in TVL. If the bug had been exploited, an attacker could have drained every dollar.

Why This Error Was So Subtle

On paper, the protocol had guardrails. Withdrawals checked user balances and debt conditions. The problem was in the assumption that scaling math could never zero out a nonzero request. That assumption fell apart when integer division and downward rounding entered the picture.

This is the kind of corner case that trips even experienced engineers. The exploit wasn’t a flashy reentrancy attack or a complex oracle trick. It was accounting drift, buried in a single math helper.

Human reviewers often spend their attention on the high-level flows: making sure debt can't be bypassed, liquidations are safe, and reentrancy is blocked. Sherlock AI, by contrast, approaches the code mechanically. It probes extreme values, runs calculations at the edges, and checks whether internal invariants still hold. That's how it landed on this rounding issue.
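Sherlock AI's internals aren't described here, so the following is only a generic, hypothetical illustration of edge-value probing in Python: it hammers the simplified conversion with tiny amounts and boundary indices and checks the invariant "paying out a nonzero amount must burn a nonzero number of scaled units." RAY = 10**27 and the chosen indices are assumptions; `find_violations` is an illustrative name.

```python
import random

RAY = 10**27  # assumed 27-decimal fixed-point base


def scaled_delta(face_value: int, index: int) -> int:
    # The simplified conversion, using integer (floor) division.
    return face_value * RAY // index


def find_violations(trials: int = 10_000, seed: int = 0) -> list[tuple[int, int]]:
    """Probe tiny withdrawals against edge-case indices and return every
    (face_value, index) pair where a nonzero payout leaves the ledger unchanged."""
    rng = random.Random(seed)
    edge_indices = [RAY, RAY + 1, 2 * RAY - 1, 2 * RAY, 10 * RAY]
    violations = []
    for _ in range(trials):
        face_value = rng.randint(1, 10)  # bias toward the smallest amounts
        index = rng.choice(edge_indices + [rng.randint(RAY, 10 * RAY)])
        # Invariant under test: paying out > 0 tokens must burn > 0 scaled units.
        if scaled_delta(face_value, index) == 0:
            violations.append((face_value, index))
    return violations


found = find_violations()
print(f"{len(found)} violating inputs; first: {found[0]}")
```

An index of exactly RAY never trips the invariant, but an index just one unit above it already does for a one-unit request, which is the kind of boundary a happy-path review tends to skip.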

The Root Cause

Technically, the bug came down to how the contract handled the conversion from “face value” (the user’s perspective) to “scaled value” (the internal ledger). A simplified version looked like this:

scaledDelta = (faceValue * RAY) / index

Whenever faceValue * RAY was smaller than index, integer division truncated scaledDelta to zero. When the function went on to update balances, the user's account remained unchanged. But when it updated reserves, the full faceValue was still subtracted.
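The condition can be stated exactly. Treating the truncating division as a floor (all quantities in the simplified formula are non-negative integers, with index > 0):

$$
\text{scaledDelta} = \left\lfloor \frac{\text{faceValue}\cdot\text{RAY}}{\text{index}} \right\rfloor = 0
\iff \text{faceValue}\cdot\text{RAY} < \text{index}
\iff \text{faceValue} \le \left\lfloor \frac{\text{index}-1}{\text{RAY}} \right\rfloor
$$

For example, assuming the common convention RAY = 10^27 and a hypothetical index of 1.05 × RAY, the right-hand bound is ⌊(1.05 × 10^27 − 1) / 10^27⌋ = 1, so a one-unit withdrawal converts to ⌊10^27 / (1.05 × 10^27)⌋ = 0 scaled units. The larger the index grows relative to RAY, the larger the requests that round away entirely.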

That disconnect (zero deducted internally, nonzero deducted externally) is what made the exploit possible.
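The source doesn't say exactly how the protocol was patched. One common mitigation for this class of bug is to round in the protocol's favor on withdrawals, that is, round the scaled amount up, or simply revert when it comes out as zero. Below is a hypothetical Python sketch of the rounding-up variant, assuming RAY = 10**27 and a made-up index; the function names are illustrative.

```python
RAY = 10**27  # assumed 27-decimal fixed-point base


def scaled_delta_round_up(face_value: int, index: int) -> int:
    """Hypothetical fixed conversion for withdrawals: round UP, so the ledger
    is never debited less than the value paid out."""
    if face_value <= 0:
        raise ValueError("withdrawal amount must be positive")
    # Ceiling division for positive integers: ceil(a / b) == (a + b - 1) // b
    return (face_value * RAY + index - 1) // index


def scaled_delta_round_down(face_value: int, index: int) -> int:
    # The original, flawed conversion, kept for comparison.
    return face_value * RAY // index


index = 3 * RAY  # hypothetical, exaggerated index
for amount in (1, 2, 3):
    # prints "1 0 1", then "2 0 1", then "3 1 1"
    print(amount, scaled_delta_round_down(amount, index), scaled_delta_round_up(amount, index))
```

With rounding up, even the smallest withdrawal burns at least one scaled unit, so any rounding dust stays in the pool instead of leaking out of it. Reverting on a zero result is the simpler alternative when tiny withdrawals have no legitimate use.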

The Potential Impact

Had this vulnerability made it to mainnet, the fallout would have been severe:

- Reserves drained: An attacker could have emptied ~$2M without ever posting collateral.

- Withdrawals failing: Honest users trying to withdraw would find reserves empty.

- Lending seized up: Without reserves, new borrows would fail instantly.

- Trust broken: The core promise of a lending pool (that deposits equal reserves) would collapse overnight.

In short, the protocol’s entire operation could have ground to a halt in a denial-of-service scenario, freezing legitimate user funds and shattering confidence in the system.

What Happened Next

The issue was first flagged by Sherlock AI’s automated review. The system generated a structured report that highlighted:

- the withdrawal function involved,

- the conditions under which scaledDelta dropped to zero,

- and the mismatch between balance updates and reserve updates.

Sherlock researcher @vagnerandrei98 reviewed the AI’s output, validated the logic, and escalated the finding. The vulnerability was patched before launch.

Because the discovery happened pre-mainnet, no funds were lost, and the protocol avoided what could have been a headline exploit.

Why This Case Matters

This vulnerability is important for two reasons:

- It’s a classic “small math” bug. Rounding errors and integer edge cases have caused millions in losses historically, but they are notoriously hard for humans to catch reliably. They hide in helper functions and only reveal themselves at the extremes.

- It's the first publicly reported multi-million-dollar case caught by an AI auditor. Until now, AI systems in security have mostly been discussed in theory or as demo-ware. This incident showed that AI can uncover real, material vulnerabilities in production-level code.

Looking Forward

The lesson isn’t that AI replaces auditors. It’s that AI can do what humans can’t: hammer the code with edge cases endlessly, surface strange mismatches, and hand researchers leads to investigate.

For teams shipping smart contracts, the implication is clear. One-off audits are necessary, but they're not enough. Code changes happen constantly, and new vulnerabilities appear every time logic shifts. Continuous, automated analysis adds another layer of defense: a way to catch small math errors before they turn into multi-million-dollar exploits.

Sherlock AI’s identification of this $2M reserve-drain bug is a milestone in that direction. It’s not hype. It’s a concrete case where AI prevented a protocol from launching with a fatal flaw.